Science Highlights, March 5, 2014

Capability Enhancement

Efficient multi-GPU computation

High energy neutron computed tomography at LANSCE

First successful shot of PHELIX at the Proton Radiography facility

Materials Physics and Applications

Interplay between frustration and spin-orbit coupling in vanadates

Capability Enhancement

Improved plutonium assay

A team in Chemistry Division has achieved a major milestone in the high-resolution X-ray (hiRX) Improved Plutonium (Pu) Assay project by demonstrating direct detection of ~40 ng of plutonium in a real spent nuclear fuel specimen using a breadboard hiRX instrument.

The research aims to develop a novel X-ray-based method for improved Pu assay for safeguards applications, for monitoring processes in spent fuel reprocessing plants, and for more accurate Pu accounting. The method has the advantage of measuring actinides directly. Current methods are indirect and apply significant corrections to arrive at a Pu value with an appreciable level of uncertainty. Within a 100-second acquisition time, hiRX reaches a detection limit below those of conventional measurement techniques such as hybrid K-edge densitometry, gamma spectroscopy, and neutron counting, which must collect data for much longer to make comparable measurements.
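Why acquisition time matters for a detection limit can be illustrated with a generic counting-statistics model. The sketch below uses Currie's detection limit for a paired-blank measurement, L_D ≈ 2.71 + 4.65√B net counts for B background counts, and converts it to a mass using an assumed sensitivity. The background rate and sensitivity are hypothetical placeholders, not hiRX or comparison-instrument values; the point is only that, once background dominates, the mass detection limit falls roughly as 1/√t, so a method with better signal-to-background reaches a given limit much sooner.

```python
import math

def currie_detection_limit_counts(background_counts: float) -> float:
    """Currie's a priori detection limit L_D (net counts) when the background
    is estimated from a paired blank of equal duration (~5% false-positive
    and false-negative rates)."""
    return 2.71 + 4.65 * math.sqrt(background_counts)

def mass_detection_limit(t_seconds: float,
                         background_rate: float,
                         sensitivity: float) -> float:
    """Smallest detectable mass (ng) for an acquisition of t_seconds.

    background_rate: background counts per second under the peak (hypothetical)
    sensitivity:     net counts per second per ng of analyte (hypothetical)
    """
    background = background_rate * t_seconds
    l_d = currie_detection_limit_counts(background)
    # Net counts grow as sensitivity * mass * t, so divide to get a mass.
    return l_d / (sensitivity * t_seconds)

if __name__ == "__main__":
    # Hypothetical parameters, for illustration only.
    for t in (100, 400, 1600):
        print(t, round(mass_detection_limit(t, background_rate=50.0, sensitivity=0.5), 3))
```

Quadrupling the acquisition time roughly halves the detection limit in this background-limited regime.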

Figure 1. hiRX elemental map of 40 ng Pu distribution in a real nuclear spent fuel sample containing fission product elements. Acquisition time per point was 10 sec over a 3x3 mm area with a 200-micrometer X-ray spot.

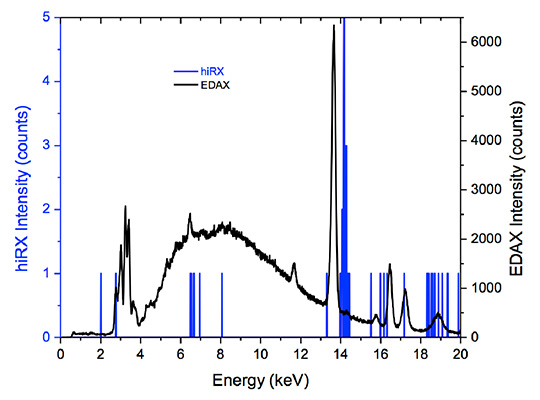
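The scan parameters in the Figure 1 caption imply the total dwell time of the map. A minimal sketch, assuming the raster step equals the 200-micrometer spot size (no overlap) and ignoring stage-motion overhead, neither of which the caption states:

```python
def raster_map_time(width_um: int, height_um: int, step_um: int, dwell_s: float):
    """Number of points and total dwell time for a rectangular raster map.

    Integer micrometers avoid floating-point rounding in the point count;
    a dimension that is not an exact multiple of the step is truncated.
    """
    nx = width_um // step_um
    ny = height_um // step_um
    n_points = nx * ny
    return n_points, n_points * dwell_s

points, total_s = raster_map_time(3000, 3000, 200, 10.0)
print(points, total_s, total_s / 60.0)  # 225 2250.0 37.5
```

Under these assumptions the 3 × 3 mm map takes roughly 37.5 minutes of dwell time.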

Figure 2. Overlay plots of hiRX (blue) and Energy Dispersive X-ray Analysis (EDAX) (black) spectra of Pu in a real spent nuclear fuel sample. EDAX is a type of micro X-ray fluorescence (MXRF). The hiRX spectrum was acquired for 100 live seconds (Lsec), the EDAX spectrum for 600 Lsec.

Los Alamos researchers include George Havrilla, Kathryn McIntosh, and Velma Montoya (Chemical Diagnostics and Engineering, C-CDE). Leah Arrigo (Pacific Northwest National Laboratory) provided the sample, and Evelyn Bond and Allen Moody (Nuclear and Radiochemistry, C-NR) prepared the sample. The DOE International Safeguards program, Next Generation Safeguards Initiative, NA24 (Johnna Marlow and Michael Browne, LANL Program Managers) funded the work. The research supports the Lab’s Global Security and Energy Security mission areas and the Science of Signatures science pillar. Technical contact: George J. Havrilla

Efficient multi-GPU computation

Shortest-path computation is a fundamental problem in computer science with applications in diverse areas such as transportation, robotics, network routing, and VLSI (very large scale integration) design. For instance, in transportation, solving the shortest path problem can be used to discover the fastest route between two points on a road map or to find a flight route between two airports with minimum total duration, while in network routing it can be used for the efficient routing of data between two computers in a computer network. Los Alamos researchers and collaborators have developed a new approach to solve the shortest-path problem for planar graphs. This approach exploits the massive on-chip parallelism available in today’s Graphics Processing Units (GPUs).

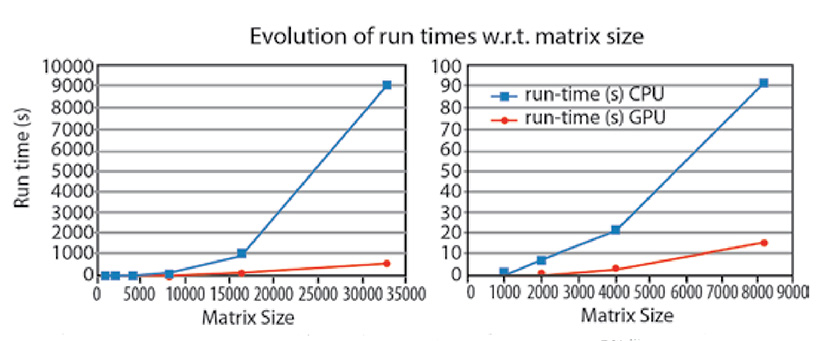

The team developed an algorithm for the all-pairs shortest path (APSP) problem (computing the matrix of distances between all pairs of vertices) on planar graphs and graphs with small separators that makes use of the massive on-chip parallelism available in GPUs. Exploiting the properties of planarity, the scientists applied a divide-and-conquer approach that keeps the hundreds of arithmetic units of the GPU busy simultaneously. This approach yielded more than an order of magnitude speed-up over the corresponding CPU (central processing unit) version. The research is part of the team's larger algorithmic effort to find efficient ways to parallelize unstructured problems, such as those found in complex networks and data mining, on highly parallel processors.
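For readers unfamiliar with APSP, the textbook Floyd-Warshall algorithm below shows what the output (a full distance matrix) looks like and why the problem parallelizes well: for a fixed intermediate vertex k, every (i, j) update is independent, so all n² entries could be assigned to separate GPU threads. This is a generic O(n³) sketch for dense matrices, not the team's separator-based divide-and-conquer method; the small example graph is hypothetical.

```python
INF = float("inf")

def floyd_warshall(dist):
    """All-pairs shortest paths on a dense weight matrix.

    dist[i][j] is the edge weight from i to j (INF if no edge, 0 on the
    diagonal; no negative cycles). Returns a new matrix of distances.
    """
    n = len(dist)
    d = [row[:] for row in dist]  # copy so the input is not modified
    for k in range(n):
        dk = d[k]
        # With k fixed, row k and column k do not change in this pass,
        # so each (i, j) update below is independent of the others.
        for i in range(n):
            dik = d[i][k]
            if dik == INF:
                continue
            row = d[i]
            for j in range(n):
                cand = dik + dk[j]
                if cand < row[j]:
                    row[j] = cand
    return d

# Hypothetical 4-vertex undirected road network.
g = [
    [0,   3,   5,   INF],
    [3,   0,   1,   INF],
    [5,   1,   0,   2],
    [INF, INF, 2,   0],
]
print(floyd_warshall(g)[0])  # [0, 3, 4, 6]
```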

Figure 3. (Left): Run times versus input matrix size for the CPU and GPU versions. (Right): Expanded view of the first four data points.

The new algorithm enables faster processing of data, which is particularly useful for transportation and many other networks. It also applies to domains that do not directly involve routing, such as the analysis of complex networks. Such networks appear in many areas (social, biological, and technological), with sizes ranging from thousands to billions of nodes. They share a common property: a hidden community structure that can be discovered by analyzing the network's links. Communities are parts of the network whose nodes are densely interconnected, while nodes in different communities are more sparsely connected. The community structure can be discovered by computing a network characteristic called “betweenness centrality,” a measure of each node’s importance in the network: for every pair of other nodes, it takes the fraction of shortest paths between them that pass through the node, and sums these fractions over all pairs. Computing betweenness centrality therefore requires solving the all-pairs shortest path problem. Moreover, given the large size of some networks, the algorithm must be very efficient and able to run on modern parallel machines such as those based on GPUs.
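Betweenness centrality is commonly computed with Brandes' algorithm, sketched below for an unweighted, undirected graph. It runs a breadth-first search from every source (together amounting to an all-pairs shortest-path computation), counts shortest paths, and then accumulates each node's share of those paths in reverse BFS order. This is a standard serial technique shown for illustration, not the team's GPU implementation; for weighted graphs the BFS would be replaced by Dijkstra's algorithm.

```python
from collections import deque

def betweenness_centrality(adj):
    """Unnormalized betweenness for an undirected, unweighted graph.

    adj: dict mapping each node to a list of its neighbours.
    """
    cb = {v: 0.0 for v in adj}
    for s in adj:
        stack = []                        # nodes in order of non-decreasing distance
        preds = {v: [] for v in adj}      # predecessors on shortest paths from s
        sigma = {v: 0 for v in adj}       # number of shortest s-v paths
        dist = {v: -1 for v in adj}
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:
            v = queue.popleft()
            stack.append(v)
            for w in adj[v]:
                if dist[w] < 0:           # w discovered for the first time
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:  # edge v-w lies on a shortest path
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = {v: 0.0 for v in adj}     # dependency of s on each node
        while stack:
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1.0 + delta[w])
            if w != s:
                cb[w] += delta[w]
    # In an undirected graph each unordered pair was counted from both ends.
    return {v: c / 2.0 for v, c in cb.items()}

star = {0: [1, 2, 3], 1: [0], 2: [0], 3: [0]}
print(betweenness_centrality(star))  # {0: 3.0, 1: 0.0, 2: 0.0, 3: 0.0}
```

The hub of the star lies on the unique shortest path of all three leaf pairs, so it scores 3, while the leaves score 0.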

Researchers include Hristo Djidjev and Sunil Thulasidasan (Information Sciences, CCS-3) and collaborators from INRIA/IRISA, University of Rennes, France. The scientists plan to present the work at the IEEE International Parallel and Distributed Processing Symposium in Phoenix, AZ.

The Laboratory Directed Research and Development (LDRD) program funded the Los Alamos research, which supports the Lab’s Global Security mission area and the Information, Science, and Technology science pillar. Technical contact: Sunil Thulasidasan

High energy neutron computed tomography at LANSCE

Los Alamos researchers developed and demonstrated a unique high energy neutron Computed Tomography (n-CT) capability at LANSCE/Weapons Neutron Research (WNR) on Flight Path 15R. This effort is a collaboration between the Non-Destructive Testing and Evaluation Group (AET-6), LANSCE Nuclear Science (LANSCE-NS), and Lawrence Livermore National Laboratory (LLNL).

Neutrons can complement X-rays for imaging because their very different attenuation characteristics allow imaging of low atomic number (Z) materials through higher-Z materials. For many years, most imaging has been performed with X-rays. However, X-rays are heavily attenuated when passing through dense materials, which limits their usefulness for imaging. This absorption increases strongly with atomic number, which hinders the ability of X-rays to view low-Z materials obscured by higher-Z materials. Moreover, X-ray scattering and detection characteristics at high energies make measuring density profiles and detecting buried features difficult.
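The effect of a dense shield can be made concrete with the narrow-beam Beer-Lambert law, I/I0 = exp(-Σ μᵢxᵢ). The sketch below shows that the relative contrast of a buried feature depends only on the feature itself, while the Poisson signal-to-noise scales with the square root of the counts that survive the shield; a shield that is nearly opaque to one probe therefore leaves too few counts to see anything behind it. Attenuation coefficients are inputs to be taken from tabulated data for the relevant material and beam energy (none are assumed here), and scatter and beam hardening are ignored.

```python
import math

def transmission(layers):
    """Narrow-beam transmitted fraction I/I0 through a stack of layers.

    layers: list of (mu_per_cm, thickness_cm) pairs, where mu is the linear
    attenuation coefficient of that material at the beam energy.
    """
    return math.exp(-sum(mu * x for mu, x in layers))

def feature_snr(n0, shield, mu_feature, mu_matrix, feature_cm):
    """Approximate Poisson-limited signal-to-noise for a buried feature of
    thickness feature_cm that replaces matrix material behind `shield`.

    n0: incident counts per detector pixel (hypothetical).
    """
    counts = n0 * transmission(shield + [(mu_matrix, feature_cm)])
    # Relative change in transmitted counts caused by the feature alone.
    contrast = math.exp(-(mu_feature - mu_matrix) * feature_cm) - 1.0
    return abs(contrast) * math.sqrt(counts)
```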

For thin items, well-established low-energy neutron imaging capabilities are maintained at user facilities such as the National Institute of Standards and Technology and the Paul Scherrer Institute. However, higher-energy neutrons are required to penetrate thicker objects. LANL and LLNL obtained the first high-energy neutron images in the mid-1990s, with exposures on the order of 30-60 minutes per frame. However, those efforts were not developed into a standing capability.

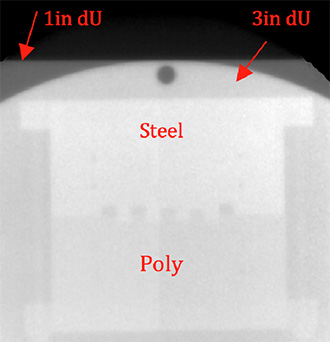

Figure 4. A phantom of steel (top half), high-density polyethylene (bottom half), and foam (center teeth) viewed through 76 mm of depleted uranium (dU). Several ~3-mm-diameter holes in the steel are visible.

The Los Alamos and Livermore collaboration has demonstrated a new high-energy neutron imaging capability at LANSCE/WNR on Flight Path 15R. The team examined two examples to show the power of high-energy neutron radiography. Figure 4 shows a radiograph of a phantom behind 76 mm of depleted uranium. For CT reconstruction, the team used the WNR neutron source to acquire an 800-view data set of a tungsten and polyethylene test object with 2- and 3-mm tungsten carbide BBs visible in the inner ring (Figure 5). Each view was acquired in less than one minute.
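The view count and per-view time above bound the beam time for one CT data set. A minimal sketch, assuming one minute per view as the upper bound and a hypothetical 180° angular range (the text does not state whether 180° or 360° was covered):

```python
def ct_scan_budget(n_views: int, minutes_per_view: float,
                   angular_range_deg: float = 180.0):
    """Upper bound on total acquisition time (hours) and the angular step
    between views (degrees). angular_range_deg is an assumption."""
    total_hours = n_views * minutes_per_view / 60.0
    step_deg = angular_range_deg / n_views
    return total_hours, step_deg

hours, step = ct_scan_budget(800, 1.0)
print(f"at most {hours:.1f} h of beam time, {step:.3f} deg between views")
```

An 800-view set therefore needs at most about 13 hours, which is practical, whereas the 30-60 minute frames of the 1990s would have stretched the same set to many days.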

Slices taken from that reconstruction (Figure 5b-c) can be compared directly with an X-ray CT slice of the same object (Figure 5d). The neutron images reveal the internal structure of the object, whereas the X-ray image does not show the same detail.

Figure 5. Three-dimensional reconstruction of the neutron CT experiment (a). Slices taken from the reconstruction (b and c) illustrate the details that can be observed. A similar slice (d) obtained using a 15 MeV X-ray source (Inner ring removed to reduce thickness and no BBs) shows the comparative advantage of neutron imaging (c) for low Z materials within high Z structures.

Researchers include James Hunter (AET-6), Jim Hall (LLNL) and Ron Nelson (LANSCE-NS). The NNSA Enhanced Surveillance Campaign provided the primary funding for the work, and the Principal Associate Directorate for Science, Technology, and Engineering (PADSTE) supplied capability development funding via a Small Equipment Grant. The Readiness in Technical Base and Facilities (RTBF) program funds the LANSCE accelerator and neutron sources. This work supports the Lab’s Nuclear Deterrence and Global Security mission areas and the Science of Signatures and Nuclear and Particle Futures science pillars. Technical contacts: James Hunter and Ron Nelson

First successful shot of PHELIX at the Proton Radiography facility

Researchers executed the first pulsed-power-driven experiment diagnosed by LANL’s Proton Radiography (pRad) facility at LANSCE. They used the Precision High Energy-density Liner Implosion eXperiment (PHELIX), an air-insulated capacitor bank that can store >400 kJ and generate peak load currents of >5 MA to implode centimeter-size liners in 10-40 µs at speeds of 1-4 km/s. PHELIX is transportable, with a footprint of only 8 ft × 25 ft, and can be positioned in the pRad beam line, allowing multi-frame imaging along the experiment’s central axis. To achieve the desired peak current with a compact pulsed-power system, PHELIX employs a two-stage Marx generator whose output is connected to a secondary, current step-up toroidal transformer. Current from the secondary flows along annular transmission plates to a central “cassette” that contains the liner load. PHELIX experiments are self-confined: no vessel or catch tank is necessary.

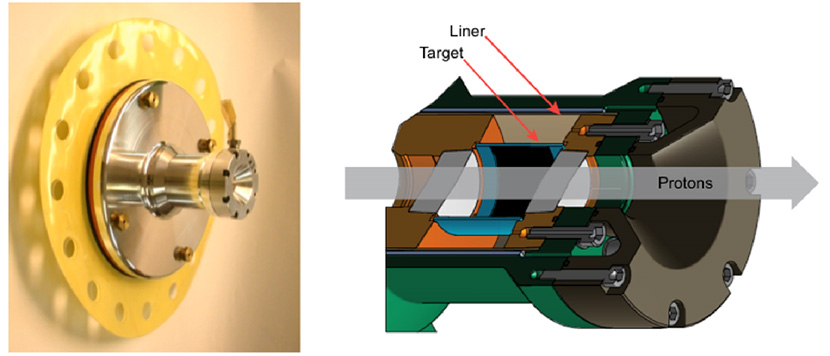

Figure 6. The PHELIX experimental cassette for the pRad experiment. (Left): Photo shows the assembled cassette prior to installation into the transformer. (Right): A cut-away schematic with the liner, target and proton trajectory indicated. The black region on the inside of the target represents the coating of tungsten particles. The initial outer diameters of the liner and target are 5.5 cm and 3 cm, respectively. The 2-cm diameter aluminum windows define the field of view of the proton radiographs.

For the initial pRad experiment, the researchers fielded a liner-on-target load configuration with micron-size tungsten particles coating the inside surface of the target. Figure 6 shows the liner load cassette and a cut-away schematic of the aluminum liner and target. The team adjusted the charge voltage to deliver a peak current of 3.7 MA to the liner. When the system was triggered, current flowed through the liner, causing it to implode and impact the target. This sent a shock wave through the target and ejected particles from its inner surface. The particles traveled ballistically to the center of the assembly. Twenty-one proton radiographs along the central axis and one X-radiograph perpendicular to the central axis imaged the implosion. Diagnostics measured the performance of the pulsed-power system, including precision (<1%) measurements of the current through the liner. All diagnostics provided 100% data return.

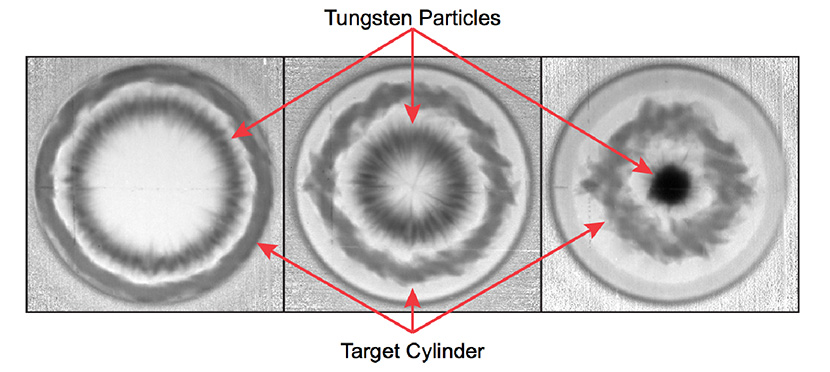

Figure 7. Three of the 21 proton-radiographs taken down the liner load central axis during the experiment. (Left to right): Images taken at approximately 30 µs, 34 µs and 39 µs after the start of current flow. In the first image the cloud of tungsten particles and target cylinder are in the field of view. In the second image, the fastest traveling particles have reached the center; in the third image, most of the tungsten particles have accumulated at the center.

Repeated tests of PHELIX with a static load showed better than 1% current reproducibility for the same charge voltage. Adjusting the charge voltage for each shot enables the researchers to tune the magnetohydrodynamic (MHD) push on the liner to match their experimental needs. Hydro-codes with MHD (e.g., Raven) predict the performance of the liner implosion for a given charge voltage.
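To first order, the MHD push on the liner is the magnetic pressure B²/(2μ0) of the azimuthal field B = μ0I/(2πr) generated by the liner current. The back-of-envelope sketch below assumes the full 3.7 MA peak current flows on the liner's outer surface at its initial radius (half the 5.5 cm outer diameter). It ignores the liner's motion, the current's time history, and the return-current geometry, which are what MHD codes such as Raven treat properly. Under these assumptions the field is about 27 T and the pressure about 290 MPa. Because pressure scales as I², a 1% change in current shifts the drive by about 2%, which is why current reproducibility matters.

```python
import math

MU0 = 4e-7 * math.pi  # vacuum permeability, T*m/A

def surface_field_tesla(current_a: float, radius_m: float) -> float:
    """Azimuthal field at the outer surface of a cylinder carrying total
    axial current I: B = mu0 * I / (2 * pi * r)."""
    return MU0 * current_a / (2.0 * math.pi * radius_m)

def magnetic_pressure_pa(current_a: float, radius_m: float) -> float:
    """Inward magnetic pressure B^2 / (2 * mu0) on the liner surface."""
    b = surface_field_tesla(current_a, radius_m)
    return b * b / (2.0 * MU0)

# Peak current of the first pRad shot and the initial liner outer radius.
b = surface_field_tesla(3.7e6, 0.0275)
p = magnetic_pressure_pa(3.7e6, 0.0275)
print(f"B = {b:.1f} T, P = {p / 1e6:.0f} MPa")
```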

Figure 7 depicts proton-radiographs taken during the experiment. The first image reveals the cloud of tungsten particles and the target cylinder in the field of view. Figure 8 shows the pre-shot and shot X-radiographs.

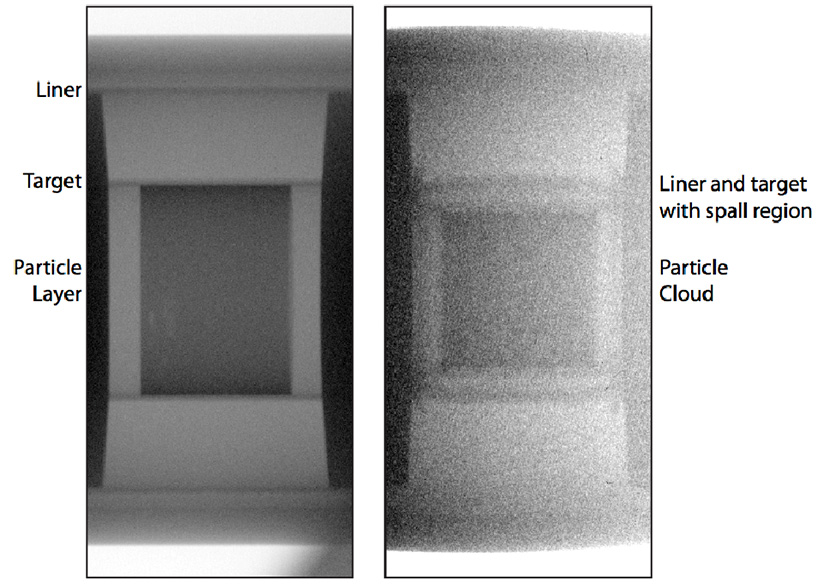

Figure 8. (Left image): Pre-shot X-radiograph. (Right image): Shot X-radiograph made approximately 26 µs after the start of current flow. The X-radiographs are recorded on storage phosphor image plates. The pre-shot X-radiograph was made in the absence of protons. The shot X-radiograph had a significant noise background as a result of the protons used for the proton-radiographs. The shot image was taken after the liner had impacted the target and had spalled.

Researchers are analyzing the data from this initial experiment and comparing them with model calculations performed using LANL hydro-codes. In a single experiment, the PHELIX-pRad combination provides more than five times the axial imaging data, at higher spatial resolution, than was previously available at the Lab’s former Atlas pulsed-power facility.

The success of PHELIX is the result of close collaboration between Physics, X-Computational Physics, Accelerator Operations and Technology, and Materials Science and Technology divisions and National Security Technologies, LLC (NSTec). Participants include D. M. Oro, P. Nedrow, J. B. Stone, L. J. Tabaka, and J. L. Tybo (Neutron Science and Technology, P-23); C. L. Rousculp (Plasma Theory and Applications, XCP-6); W. A. Reass, A. J. Balmes, and J. M. Audia (RF Engineering, AOT-RFE); J. R. Griego (Plasma Physics, P-24); A. Saunders, J. D. Lopez, C. Morris, F. G. Mariam, B. J. Hollander, and C. J. Espinoza (Subatomic Physics, P-25); D. M. Baca (Mechanical Design Engineering, AOT-MDE); F. Fierro and R. B. Randolph (Polymers and Coatings, MST-7); R. E. Reinovsky (AD Weapons Physics, ADX); P. J. Turchi (Physics, P-DO); P. A. Flores and T. E. Graves (NSTec).

NNSA’s Science Campaign 1 funded the work, which supports the Lab’s Nuclear Deterrence mission area and the Science of Signatures and Nuclear and Particle Futures science pillars. Technical contact: David Oro

Chemistry

Silicone elastomers: from design to application

A Focus Exchange under JOWOG28 (Non-Nuclear Materials) took place at LANL for discussion and peer review of all aspects of silicone elastomer material development. Scientists and engineers from Lawrence Livermore National Laboratory, Honeywell’s Kansas City Plant, Los Alamos National Laboratory, and the Atomic Weapons Establishment met to share information and to offer insights into, and solutions for, problems encountered during the material’s design, development, and application. For applied research, it is important to understand the final requirements of the application in order to address modeling and characterization effectively at each sub-level. This approach ensures that researchers make appropriate technical investments to improve their understanding of material and component behavior, system performance, and lifetime prediction. Silicone elastomers are very versatile and can be designed and fabricated to meet a range of requirements. However, all polymers are susceptible to damage and failure from exposure to harsh environments: mechanical strain, radiation, thermal conditions, and aging. The product must survive in service for a specified period of time.

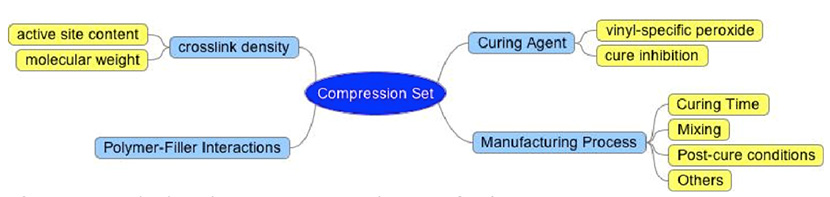

Figure 9. Methods to improve the compression set of polymers

The meeting attendees discussed different ways to tune the polymer’s properties to meet their goals and requirements. Compression set (the permanent deformation of a material that has been held under applied strain) is often a concern when service conditions include compressive stress and temperature. High compression set implies that the material has experienced high deformation and loss of properties and/or performance; therefore, low compression set is desired for most applications. Compression set can be improved by tuning the chemistry of the polymer, such as its crosslink density (Figure 9). Crosslink density describes the network structure of the final polymeric product. It can be tuned by changing the molecular weight of the polymer and the number of crosslinking sites (e.g., vinyl content).
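Compression set is usually reported as the fraction of an imposed deflection that the specimen fails to recover. The sketch below uses the widely used constant-deflection definition (the form of ASTM D395 Method B); the summary does not say which test method the participating labs used, and the example thicknesses are hypothetical.

```python
def compression_set_percent(t_original: float, t_recovered: float,
                            t_spacer: float) -> float:
    """Compression set under constant deflection, in percent:

        CS = (t0 - ti) / (t0 - tn) * 100

    t0: original specimen thickness
    ti: thickness after release from compression and recovery
    tn: spacer thickness (the compressed thickness)
    All three must be in the same length unit.
    """
    if t_original <= t_spacer:
        raise ValueError("spacer must be thinner than the original specimen")
    return (t_original - t_recovered) / (t_original - t_spacer) * 100.0

# Hypothetical specimen: 10.0 mm thick, held at 7.5 mm, recovers to 9.5 mm.
print(compression_set_percent(10.0, 9.5, 7.5))
```

A value of 0% means full recovery and 100% means the specimen stayed at the spacer thickness; the low values sought for most applications sit near the bottom of that range.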

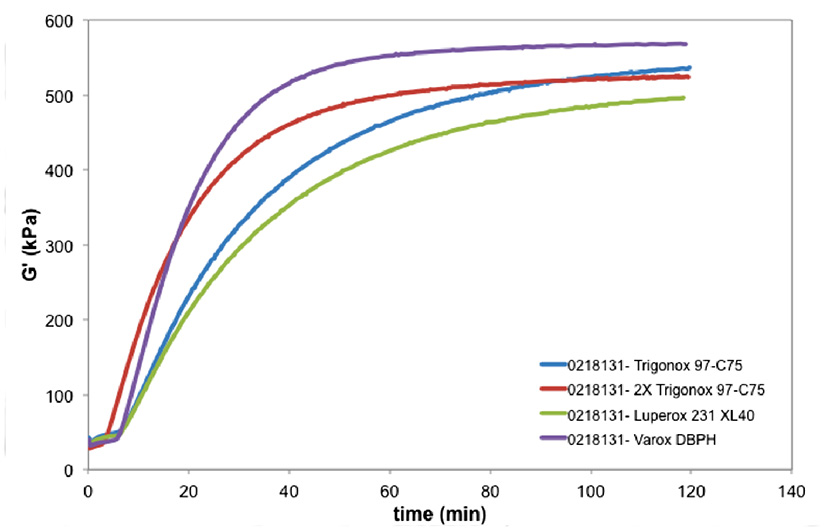

Figure 10. Curing profiles (modulus as a function of time) of four commercially available elastomers. Varox DBPH produced a polymer with higher crosslink density. Increasing the amount of Trigonox increased the reaction rate but not the final modulus. Luperox 231 XL40 produced the softest polymeric material.
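The link between the final modulus in Figure 10 and crosslink density can be estimated with ideal (affine) rubber elasticity, G = νRT, where ν is the molar density of elastically effective network strands. The sketch below assumes an unfilled network and a shear (not tensile) modulus; reinforcing fillers and entanglements, common in silicone formulations, make this only a rough estimate. The 1 MPa modulus and 970 kg/m³ density are hypothetical inputs, not values from the figure.

```python
R = 8.314462618  # molar gas constant, J/(mol*K)

def strand_density_from_shear_modulus(g_pa: float, temperature_k: float) -> float:
    """Affine-network estimate nu = G / (R * T), in mol/m^3 of network strands.

    For tetrafunctional crosslinks the crosslink-junction density is about nu / 2.
    For an incompressible rubber, Young's modulus E is about 3 * G.
    """
    return g_pa / (R * temperature_k)

def mc_from_strand_density(density_kg_m3: float, nu_mol_m3: float) -> float:
    """Average molar mass between crosslinks, Mc = rho / nu, in kg/mol."""
    return density_kg_m3 / nu_mol_m3

# Hypothetical plateau shear modulus of 1 MPa at 25 C.
nu = strand_density_from_shear_modulus(1.0e6, 298.15)
print(f"nu = {nu:.0f} mol/m^3, Mc = {mc_from_strand_density(970.0, nu):.2f} kg/mol")
```

A higher plateau modulus thus corresponds to more strands per volume and a shorter chain length between crosslinks, consistent with the caption's reading of the Varox DBPH curve.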

The curing agent should be chosen for the desired chemical reaction. The manufacturing process should always be included as part of the design of experiments. It is important to understand what happens to the polymer during mixing, curing and post-curing to improve the manufacturing procedure. When chemistry and engineering come together, a better product can be designed and fabricated.

The next JOWOG28 Focus Exchange meeting, in March 2014, will include updates on material characterization, mechanical tests, curing behavior, detailed documentation of the manufacturing process, material compatibility, and material models for lifetime performance prediction.

The B61 Life Extension Program (B61 LEP) funded this work, which supports the Lab’s Nuclear Deterrence mission area and the Materials for the Future science pillar. Technical contacts: Denisse Ortiz-Acosta (Chemical Diagnostics and Engineering, C-CDE) and Partha Rangaswami (Advanced Engineering Analysis, W-13)

Computer, Computational and Statistical Sciences

Probing the engine of core collapse supernovae

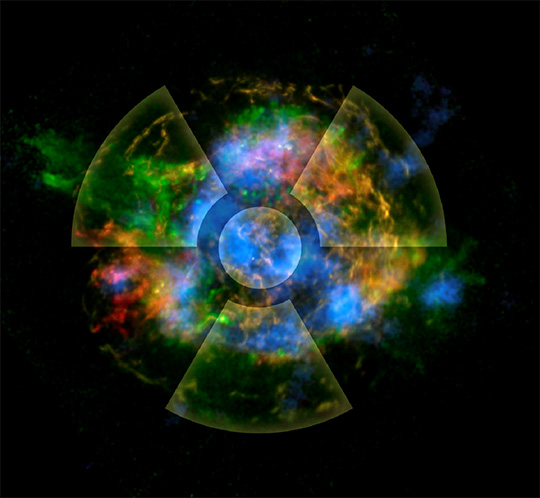

In 2012, NASA launched the Nuclear Spectroscopic Telescope Array (NuSTAR) satellite, which was designed to map X-ray sources in the universe with energies between 3 and 80 keV. This energy range includes the decay lines of radioactive titanium-44 (44Ti), an isotope produced in the inner core of a supernova engine. One of NuSTAR’s primary goals is to map the 44Ti distribution in the nearby Cassiopeia A supernova remnant. The journal Nature has published the findings from these observations.

Chris Fryer (CCS-2, Computational Physics and Methods) worked with Gabe Rockefeller and post-doc Carola Ellinger (CCS-2) to lead the theoretical interpretation of the observations, taking advantage of LANL’s institutional computing resources and expertise in computational science to conduct detailed three-dimensional (3-D) simulations of the supernova explosion forming the Cassiopeia A remnant and its subsequent evolution.

Both nickel-56 (56Ni, which decays to iron) and 44Ti are produced at the core of a supernova engine, where temperatures exceed 500 keV (about 5.8 billion K) and densities are very high. In supernova remnants, scientists can observe iron only when it is excited by shocks. This yields only a partial picture of the explosion, one that had led observers to argue for increasingly exotic models to explain this remnant. By observing the decay lines of 44Ti, the NuSTAR team was able, for the first time, to probe the true nature of the Cassiopeia A supernova explosion. The researchers found that the distributions of 44Ti and iron are not spatially colocated: earlier studies of iron had missed most of the inner supernova ejecta. The new observations rule out a symmetric explosion and instead indicate that low-mode convection is required to explain it. The NuSTAR observations reaffirm that Cassiopeia A had a standard core-collapse engine, which is produced when the core of a massive star implodes.
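Radioactive decay explains why 44Ti is a cleaner tracer than iron. The 56Ni that becomes iron decays (via 56Co) within months, so centuries later the iron no longer emits decay lines and is visible only where shocks heat it. 44Ti, with a half-life of roughly 60 years, still emits its lines near 68 and 78 keV, inside NuSTAR's band. The sketch below uses rounded literature half-lives and an approximate remnant age of 340 years; these are assumptions, not numbers from this article.

```python
import math

def remaining_fraction(elapsed: float, half_life: float) -> float:
    """Fraction of a radioactive isotope left after `elapsed` time
    (same units as half_life): N/N0 = exp(-ln 2 * t / T_half)."""
    return math.exp(-math.log(2.0) * elapsed / half_life)

# Rounded literature values (years); assumptions, not from the article.
T_HALF_TI44 = 60.0            # 44Ti -> 44Sc -> 44Ca
T_HALF_NI56 = 6.1 / 365.25    # 56Ni -> 56Co, about 6.1 days
T_HALF_CO56 = 77.2 / 365.25   # 56Co -> 56Fe, about 77 days
AGE_CAS_A = 340.0             # approximate age of the remnant

for name, t_half in [("44Ti", T_HALF_TI44), ("56Ni", T_HALF_NI56), ("56Co", T_HALF_CO56)]:
    print(name, remaining_fraction(AGE_CAS_A, t_half))
```

About 2% of the original 44Ti survives, while the 56Ni and 56Co fractions underflow to zero.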

Figure 11. Distribution of observed elements in the Cassiopeia A remnant: shocked silicon (green), shocked iron (red), and 44Ti (blue). The asymmetries in the 44Ti are set by the turbulence near the collapsed stellar core. High performance computing (HPC) clusters created the image.

Reference: “Asymmetries in Core-collapse Supernovae from Maps of Radioactive 44Ti in Cassiopeia A,” Nature 506, 339 (2014); doi: 10.1038/nature12997. Chris Fryer (CCS-2) and collaborators from the California Institute of Technology, University of California – Berkeley, North Carolina State University, NASA Goddard Space Flight Center, University of Texas – Arlington, Durham University, McGill University, Université de Toulouse, CNRS, Technical University of Denmark, Lawrence Livermore National Laboratory, Agenzia Spaziale Italiana, Columbia University, RIKEN, Kavli Institute for Particle Astrophysics and Cosmology, SLAC National Accelerator Laboratory, and INAF – Osservatorio Astronomico di Roma authored the paper. More information and an interview with Aimee Hungerford (XTD Integrated Design and Assessment, XTD-IDA): http://int.lanl.gov/science/science-highlights/_assets/media/super-nova.html

Laboratory Directed Research and Development (LDRD) funded the Los Alamos portion of the research. Fryer used the SNSPH code, a parallel 3-D smoothed particle radiation hydrodynamics code, which was developed at Los Alamos. SNSPH is used to test algorithms for RAGE. He ran the SNSPH simulations on the Lab’s Conejo and Mustang supercomputers. This work ties to research in NNSA’s Advanced Simulation and Computing (ASC) program and the Lab’s Nuclear Deterrence mission area by demonstrating LANL’s capabilities in computational physics and by developing new parallel algorithms. The work supports LANL’s Nuclear and Particle Futures and Information, Science, and Technology science pillars. Technical contact: Chris Fryer

Earth and Environmental Sciences

Understanding how trees die

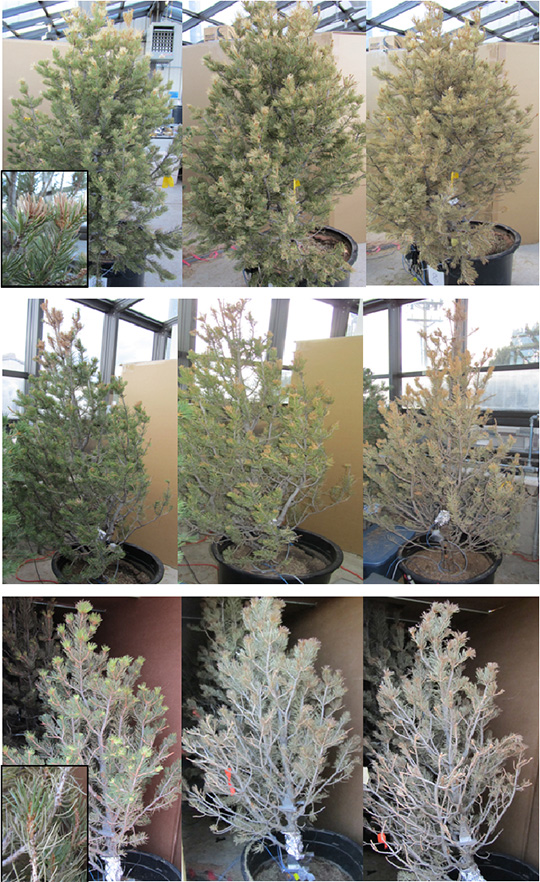

Drought-related, continental-scale forest mortality events have been observed with increasing frequency during the past 20 years in all major vegetation zones. Despite decades of research on plant drought tolerance, the physiological mechanisms by which trees succumb to drought are still under debate. The mechanism of mortality may impact plant survival rates during droughts of different duration and severity. Therefore, this knowledge gap is one of the major sources of uncertainty in predicting vegetation changes and their feedbacks to climate. Los Alamos researchers and collaborators are examining the mechanisms of tree mortality. The journal Plant, Cell and Environment has published their results.

Current leading hypotheses of plant mortality mechanisms are based on scientists’ understanding of plant water relations. Hydraulic failure is expected to occur when water loss through transpiration sufficiently exceeds uptake by roots, resulting in bubble formation (embolism) and progressive loss of conductivity in the vascular tissue. Conversely, carbon starvation is hypothesized to result from avoiding hydraulic failure through stomatal (pore) closure, which causes a sustained negative carbon balance. Carbon starvation may be a slow process, affecting plants that close their stomata relatively early during drought and leading to mortality during long droughts that cause prolonged periods without net photosynthesis. Hydraulic failure, on the other hand, is expected to proceed more rapidly, leading to fast mortality in plants that keep their stomata open during drought.
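Progressive loss of conductivity is conventionally quantified as the percent loss of conductivity (PLC) relative to the maximum, and its dependence on water potential is often summarized by a sigmoidal "vulnerability curve." The sketch below uses the common Pammenter and Vander Willigen form. The P50 and slope values are hypothetical and are not measurements from this study.

```python
import math

def percent_loss_conductivity(k_measured: float, k_max: float) -> float:
    """PLC = 100 * (1 - K / Kmax): share of maximum xylem hydraulic
    conductivity lost, e.g. to embolism."""
    return 100.0 * (1.0 - k_measured / k_max)

def vulnerability_curve(psi_mpa: float, p50_mpa: float, slope: float) -> float:
    """Sigmoidal vulnerability curve: PLC = 100 / (1 + exp(slope * (psi - P50))).

    psi and P50 are (negative) xylem water potentials in MPa; slope > 0.
    As psi drops below P50, PLC rises toward 100%.
    """
    return 100.0 / (1.0 + math.exp(slope * (psi_mpa - p50_mpa)))

# Hypothetical species with P50 = -4 MPa and slope 2 per MPa.
for psi in (-1.0, -4.0, -8.0):
    print(psi, round(vulnerability_curve(psi, -4.0, 2.0), 1))
```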

Photo. Images showing the progression of needle response to drought and shade treatments, from initiation of response (left) to death (right). Top, middle, and bottom panels show fast-dying drought, slow-dying drought, and shade trees, respectively. Insets show that branches of drought trees lose their 1-year-old, most vital needles first (top panel), a result consistent with hydraulic failure. Branches of shade trees lose old needles first to conserve carbohydrates while retaining the most vital needles as long as possible (bottom panel). Mortality of slow-dying drought trees began like hydraulic failure but came to resemble carbon starvation toward the end.

To shed light on how these hypothesized mechanisms lead to mortality and how plant survival time could be predicted from physiological changes or traits, a team of researchers from Earth System Observations (EES-14) performed a greenhouse experiment in which they exposed mature piñon pine trees to severe drought (full light, no irrigation), shade (no light, daily irrigation), and control treatments. The scientists expected that the drought treatment would cause mortality via hydraulic failure, while the shade treatment would prevent any photosynthesis and lead to carbon starvation.

The results highlight the complexity of water-carbon interactions in plant mortality: trees of the same species and similar size can survive severe drought for anywhere from two months to almost a year and die in very different ways (Photo). What controls this remarkable span in survival time remains unclear. This study shows that osmoregulation of cell turgor (rigidity due to fluid inside the cell) and water-related controls on the use of stored carbohydrates are the best predictors of survival time, independent of mortality mechanism.

Reference: “How Do Trees Die? A Test of the Hydraulic Failure and Carbon Starvation Hypotheses” Plant, Cell and Environment (2013) doi: 10.1111/pce.12141. The coauthors include Sanna Sevanto, Nate McDowell, and L. Turin Dickman (EES-14); Robert Pangle and William Pockman (University of New Mexico).

The DOE – Office of Science, Biological and Environmental Research and Laboratory Directed Research and Development funded different aspects of LANL’s work, which supports the Lab’s Global Security mission area and the Science of Signatures science pillar. Technical contact: Sanna Sevanto

Materials Physics and Applications

Interplay between frustration and spin-orbit coupling in vanadates

Spin-orbit interactions do not normally play the leading role in determining the magnetic ordering of 3d transition-metal-based materials. However, in certain magnets the dominant magnetic interactions are frustrated, leaving spin-orbit interactions to play an important role in selecting the ordering. Magnetic frustration occurs when the magnetic interactions in a system cannot all be satisfied simultaneously. Understanding how to model the interplay between spin-orbit interactions, magnetism, and structure is important for a growing number of frustrated materials, because these materials have potential applications as sensors and in memory devices. Los Alamos researchers and collaborators have combined experiment and theory to understand frustration in the archetypal frustrated spinel compounds MV2O4 (M = Cd, Mg). Physical Review Letters published the findings.
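The simplest illustration of frustration is three Ising spins on a triangle with antiferromagnetic coupling (the vanadium sites of a spinel form a pyrochlore lattice of corner-sharing tetrahedra, whose faces are triangles). The toy enumeration below is not the team's model. It shows that no configuration satisfies all three bonds, and that the lowest energy is shared by six degenerate states. A degeneracy like this is what leaves weaker effects, such as spin-orbit coupling, to select the actual order.

```python
from itertools import product

J = 1.0                          # antiferromagnetic coupling (J > 0)
BONDS = [(0, 1), (1, 2), (0, 2)]  # the three edges of a triangle

def energy(spins):
    """E = J * sum over bonds of s_i * s_j; antiparallel pairs lower E."""
    return J * sum(spins[i] * spins[j] for i, j in BONDS)

def unsatisfied_bonds(spins):
    """Count bonds whose spins are parallel (frustrated for J > 0)."""
    return sum(1 for i, j in BONDS if spins[i] == spins[j])

configs = list(product((-1, 1), repeat=3))
e_min = min(energy(s) for s in configs)
ground_states = [s for s in configs if energy(s) == e_min]
print("ground-state energy:", e_min)
print("degeneracy:", len(ground_states))
print("unsatisfied bonds per ground state:", {unsatisfied_bonds(s) for s in ground_states})
```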

Frustrated systems are at a tipping point where small external stimuli can push them from one magnetic configuration to another. This makes them very tunable and sensitive to external fields, and therefore useful for applications. The scientists studied materials in which spin frustration and orbital ordering occur on the same energy scale. (Electrons produce magnetism both through their intrinsic spin and through their orbital motion around the atomic nucleus.) The orbital ordering adds a degree of complexity and is a challenge for theoretical description. However, it also adds functionality, because orbital magnetism couples to the structure of the material and to its electrical properties. This enables coupling between magnetism and structure, and between magnetism and electric polarization. Potential applications of this general area of research include sensors, memories, high-frequency filters, tuners, resonators, and energy harvesting.

The team developed a model for the magnetic, orbital, and structural interactions. They conducted magnetization and electric polarization measurements in pulsed magnetic fields up to 65 T at the National High Magnetic Field Laboratory at Los Alamos to chart magnetic-field-induced multiferroic transitions and determine the high-field phase diagram.

Figure 12. H-T phase diagrams of CdV2O4 and MgV2O4 obtained from M(T, H) and ΔP(H) measurements. PM, PE, and FE denote the paramagnetic, paraelectric, and ferroelectric states, respectively. The shaded area is the FE state, and the lined area represents a mixed PE-FE state due to the polycrystalline nature of CdV2O4.

Reference: “Magnetic Field Induced Transition in Vanadium Spinels,” Physical Review Letters 112, 017207 (2014); doi: 10.1103/PhysRevLett.112.017207. Los Alamos contributors include Eun Mun and Vivien Zapf (Condensed Matter and Magnet Science, MPA-CMMS), Gia-Wei Chern and Cristian Batista (Physics of Condensed Matter and Complex Systems, T-4). Coauthors are V. Vardo and F. Rivadulla (University of Santiago de Compostela), R. Sinclair and H. D. Zhou (University of Tennessee). Brian Scott (Materials Synthesis and Integrated Devices, MPA-11) provided single-crystal orientations. Scientists at the University of Santiago de Compostela and the University of Tennessee grew high-quality crystals for the research.

The Laboratory Directed Research and Development (LDRD) program funded the research at Los Alamos. The National Science Foundation, the DOE, and the State of Florida sponsor the National High Magnetic Field Laboratory – Pulsed Field Facility at Los Alamos. The work supports the Lab’s Energy Security and Global Security mission areas, and the Materials for the Future science pillar. Technical contact: Vivien Zapf

Materials Science and Technology

First atomic-scale study of natural radioactivity in human DNA

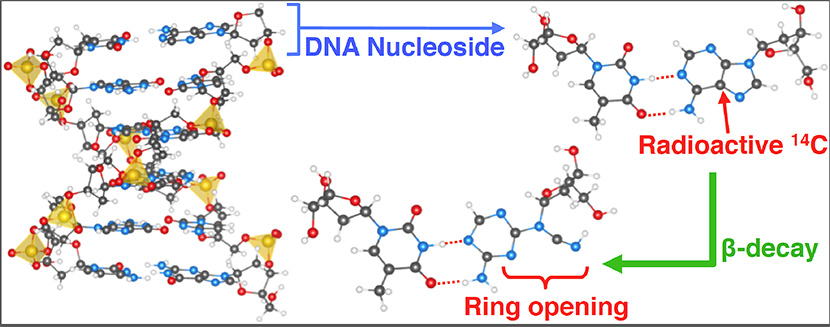

Carbon-14 (14C) is an important beta (β-) emitter because it is ubiquitous in the environment and an intrinsic part of the genetic material. Over a lifetime, around 50 billion 14C decays occur within human DNA. Curtin University and Los Alamos researchers used high-performance computers to examine how the transmutation of naturally occurring radioactive 14C atoms into nitrogen-14 (14N) atoms affects the stability of DNA molecules. About 20-30 such transmutation events per second occur within the base pairs and sugar groups of DNA. The journal Biochimica et Biophysica Acta - General Subjects published the findings, and ScienceDaily, a website showcasing the world’s top science news stories, highlighted the research.
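The per-second rate and the lifetime total quoted above can be cross-checked with simple arithmetic. The sketch assumes an 80-year lifespan and a constant decay rate, neither of which the text specifies:

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year

def lifetime_decays(rate_per_s: float, years: float) -> float:
    """Total decays at a constant rate over the given number of years."""
    return rate_per_s * years * SECONDS_PER_YEAR

for rate in (20, 30):
    print(f"{rate} decays/s over 80 years: {lifetime_decays(rate, 80):.1e}")
```

The result, roughly 50 to 75 billion decays, is the same order of magnitude as the "around 50 billion" figure, which matches the lower end of the quoted rate.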

Figure 13. A schematic of the transmutation of 14C via beta (β-) decay in DNA.

The team applied ab initio molecular dynamics to quantify 14C-induced bond rupture in a variety of organic molecules, including DNA base pairs. They found that the recoil energy of the daughter 14N produced by 14C decay is sometimes sufficient to break chemical bonds. The results illustrate how the genetic apparatus accommodates the formation of a nitrogen atom. Double bonds and ring structures confer radiation resistance; these features, present in the canonical bases of DNA, enhance their resistance to 14C-induced bond breaking. In contrast, the sugar group of the DNA and RNA backbone is vulnerable to single-strand breaks. The team showed that 14C decay provides a mechanism for creating mutagenic wobble-type mispairs.
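Why the recoil is only "sometimes" enough to break a bond follows from decay kinematics. The daughter nucleus recoils hardest when the beta electron carries the full endpoint energy (Q ≈ 156.5 keV) and the antineutrino carries essentially none. Momentum balance then gives E_R = (p_e c)²/(2Mc²) with (p_e c)² = T(T + 2m_ec²). This is a kinematic upper bound using standard constants, not the ab initio method of the study. It gives about 7 eV, compared with roughly 3.6 eV for a single C-C bond, and most decays share energy with the antineutrino and recoil with much less.

```python
# Physical constants (keV) and the 14C beta-decay endpoint energy.
ME_C2_KEV = 510.999    # electron rest energy
AMU_KEV = 931494.10    # atomic mass unit, keV/c^2
M_N14_U = 14.003074    # atomic mass of 14N in u
Q_KEV = 156.476        # maximum electron kinetic energy in 14C -> 14N decay

def max_recoil_energy_ev(q_kev: float = Q_KEV) -> float:
    """Maximum recoil kinetic energy (eV) of the daughter 14N.

    The electron (kinetic energy T = Q) is treated relativistically:
    (p c)^2 = T * (T + 2 m_e c^2). The heavy nucleus takes equal and
    opposite momentum and recoils non-relativistically: E_R = p^2 / (2 M).
    """
    pc_sq = q_kev * (q_kev + 2.0 * ME_C2_KEV)  # (p_e c)^2 in keV^2
    m_c2 = M_N14_U * AMU_KEV                   # 14N rest energy in keV
    return pc_sq / (2.0 * m_c2) * 1000.0       # keV -> eV

print(f"max recoil energy: {max_recoil_energy_ev():.2f} eV")
```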

This is the first time researchers have examined natural radioactivity within human DNA at the atomic scale. DNA’s resistance to natural radioactivity had not previously been recognized. The findings raise questions such as how the genetic apparatus deals with the appearance of an extra nitrogen in the canonical bases. It is not obvious whether the DNA repair mechanism detects this modification, or how DNA replication is affected by a non-canonical nucleobase. 14C may be a source of genetic alteration that is impossible to avoid because radiocarbon is universally present in the environment.

References: “Carbon-14 Decay as a Source of Non-canonical Bases in DNA,” Biochimica et Biophysica Acta - General Subjects, 1840, 526 (2014); doi: 10.1016/j.bbagen.2013.10.003. Researchers include Michel Sassi, Damien J. Carter, and Nigel A. Marks (Curtin University); Blas P. Uberuaga and Chris R. Stanek (Materials Science in Radiation and Dynamics Extremes, MST-8).

“Radioactivity muddles alphabet of DNA,” ScienceDaily, Dec. 17, 2013, http://www.sciencedaily.com/releases/2013/12/131217104231.htm

The work is a spinoff of nuclear waste research funded by LANL’s Laboratory Directed Research and Development (LDRD) program. The team had developed a suite of computational tools to examine deliberate radioactivity in crystalline solids and the effect of transmutation on waste-form stability.

The scientists extended these tools to natural radioactivity in molecules. The foundational work supports LANL’s Energy Security mission area and Materials for the Future science pillar. Technical contact: Chris Stanek