Science Highlights, January 22, 2014

Awards and Recognition

Gordon Bell Prize finalists at Supercomputing 2013

Two sets of Lab researchers were finalists for the Gordon Bell Prize, which was awarded during a special session at the Supercomputing 2013 Conference, the International Conference for High Performance Computing, Networking, Storage and Analysis. The ACM Gordon Bell Prize recognizes outstanding achievement in high-performance computing. The award tracks the progress over time of parallel computing, with particular emphasis on rewarding innovation in applying high-performance computing to applications in science, engineering, and large-scale data analytics. Prizes may be awarded for peak performance or special achievements in scalability and time-to-solution on important science and engineering problems. Gordon Bell, a pioneer in high-performance and parallel computing, provides financial support of the $10,000 award. ACM (Association for Computing Machinery) is the world’s largest educational and scientific computing society. The technology finalists from LANL are described in the following paragraphs.

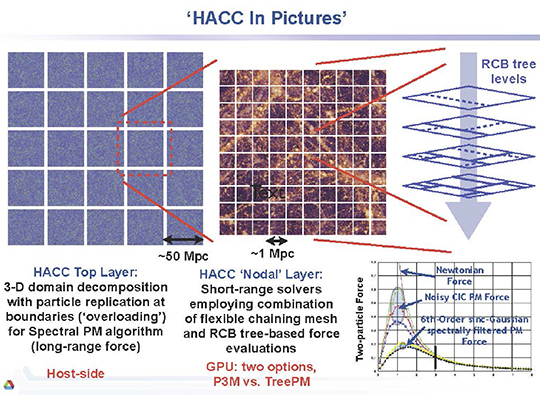

HACC: Extreme Scaling and Performance Across Diverse Architectures

Supercomputing is evolving towards hybrid and accelerator-based architectures with millions of cores. David Daniel (CCS-7, Applied Computer Sciences) and Patricia Fasel (Information Sciences, CCS-3) were part of a team that described HACC (Hardware/Hybrid Accelerated Cosmology Code), which exploits this diverse landscape. The code has attained unprecedented levels of scalable performance on supercomputers at several national laboratories. The researchers presented performance results for full system runs on two of the world’s most powerful machines (Sequoia at Lawrence Livermore National Laboratory and Titan at Oak Ridge National Laboratory). Although the systems have very different architectures, HACC shows excellent performance and parallel efficiency on both.

Figure 1. Schematic of scalable performance with HACC.

The research aims to simulate the evolution of large-scale structure in the universe at a scale and dynamic range greater than previously achievable. The team developed an application infrastructure that adapts well to a variety of challenging computer architectures that are used in leading supercomputers today, and will become more common in the future. The scientists melded particle and grid methods using a novel algorithmic structure that flexibly maps across supercomputer architectures.

David Daniel designed and implemented the solver for the long-range gravitational force. He developed a highly scalable parallel fast Fourier transform that is essential to enable this approach for large cosmological simulations above the Petaflop level. Pat Fasel implemented parallel particle interchange, a critical part of application infrastructure necessary for the implementation and success of this project, and scalable data analysis algorithms.
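To illustrate why a Fourier transform is central to a long-range gravity solver, the following minimal Python sketch solves the Poisson equation on a one-dimensional periodic grid. It is not HACC code. The function names `dft` and `solve_poisson_periodic`, the default `g=1.0`, and the use of a naive O(N^2) transform in place of a parallel FFT are all assumptions made for illustration.

```python
import cmath
import math


def dft(values, sign):
    """Naive discrete Fourier transform (sign=-1 forward, sign=+1 unnormalized inverse)."""
    n = len(values)
    return [
        sum(v * cmath.exp(sign * 2j * math.pi * k * j / n) for j, v in enumerate(values))
        for k in range(n)
    ]


def solve_poisson_periodic(rho, box_length, g=1.0):
    """Solve d2(phi)/dx2 = 4*pi*g*rho on a periodic 1-D grid.

    In Fourier space the equation becomes phi_k = -4*pi*g*rho_k / k**2.
    The k = 0 (mean) mode is set to zero, a common choice for periodic boxes.
    """
    n = len(rho)
    rho_k = dft(rho, -1)
    phi_k = [0j] * n
    for m in range(1, n):
        # Map the array index to a signed integer frequency.
        freq = m if m <= n // 2 else m - n
        k = 2.0 * math.pi * freq / box_length
        phi_k[m] = -4.0 * math.pi * g * rho_k[m] / (k * k)
    # Inverse transform, normalized by n; the result is real for real input.
    return [(value / n).real for value in dft(phi_k, +1)]


# Hypothetical test density: a single cosine mode in a unit box.
n_cells = 16
density = [math.cos(2.0 * math.pi * j / n_cells) for j in range(n_cells)]
potential = solve_poisson_periodic(density, box_length=1.0)
print(potential[0])
```

For a single cosine mode of unit amplitude in a unit box, the analytic potential is the same cosine scaled by -1/pi (with g = 1), which gives a simple check on the sketch. A production solver would replace `dft` with a distributed three-dimensional FFT.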

The results demonstrate that the complex hierarchical supercomputer architectures of the future can be effectively harnessed for extreme-scale discovery science by choosing algorithms and software architectures that map well to each level of the hierarchy. The largest large-scale cosmology simulations ever achieved were performed between 10 and 20 Petaflop/s. In addition to Daniel and Fasel, researchers include Salman Habib, Vitali Morozov, Nicholas Frontiere, Hal Finkel, Katrin Heitmann, Adrian Pope, Kalyan Kumaran, Venkat Vishwanath, Tom Peterka, and Joseph Insley (Argonne National Laboratory); Taisuke Boku (University of Tsukuba); and Zarija Lukic (Lawrence Berkeley National Laboratory).

The research is applicable to a broad range of predictive and discovery multiscale and multiphysics supercomputer applications for LANL’s mission areas, and it supports the Laboratory’s Information, Science, and Technology science pillar. Technical contacts: David Daniel and Pat Fasel

An Improved Parallel Hashed Oct-tree N-body Algorithm for Cosmological Simulation

Michael Warren (Nuclear and Particle Physics, Astrophysics and Cosmology, T-2) presented a computational and mathematical approach to the cosmological N-body problem. The paper reported the improvements made over the past two decades to the adaptive treecode N-body method (HOT). During the same timescale, cosmology has been transformed from a qualitative to a quantitative science.

Figure 2. The images show recent results from the Planck satellite compared with light-cone output from 2HOT. The numerical simulations are presented in the same HEALPix Mollweide projection of the celestial sphere that Planck used. (Top panel): The density of dark matter in a 69-billion particle simulation (upper left) compared with the fluctuations in the cosmic microwave background. The obvious difference in the upper panel is due to the imperfect removal of sources within our galaxy in the Planck data. The statistical measurements of the smaller details match precisely between the observation and simulation. (Bottom panel): The simulation compared with the gravitational lensing signal measured by Planck.

Cosmological simulations are the cornerstone of theoretical analysis of structure in the Universe from scales of kiloparsecs to gigaparsecs. Predictions from numerical models are critical to almost every aspect of the studies of dark matter and dark energy due to the intrinsically non-linear gravitational evolution of matter.

Warren described an approach to the cosmological N-body problem, which demonstrated performance and scalability measured up to 256k (2^18) processors. He discussed a suite of simulations to probe the finest details of our current understanding of cosmology. Warren presented error analysis and scientific application results from a series of more than ten 69 billion (4096^3) particle cosmological simulations, accounting for 4 x 10^20 floating point operations. These results include the first simulations using the new constraints on the standard model of cosmology from the Planck satellite. The simulations set a new standard for accuracy and scientific throughput, while meeting or exceeding the computational efficiency of the latest generation of hybrid TreePM N-body methods. The speed and accuracy of the code have led to the award of a new INCITE project using the Titan supercomputer at Oak Ridge National Laboratory to study “Dark Matter at Extreme Scales.”
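The "hashed oct-tree" idea in the paper title can be pictured with a short Python sketch. It is a generic illustration of the technique, not code from HOT or 2HOT: each cell of an oct-tree gets an integer key built by interleaving the bits of its coordinates, and the cells live in a hash table (here a plain `dict`) rather than in a pointer-linked tree. The names `morton_key`, `parent_key`, and `build_cell_counts`, and the leading placeholder bit convention, are assumptions for illustration.

```python
def morton_key(ix, iy, iz, level):
    """Interleave the low `level` bits of three integer cell coordinates.

    A leading 1 bit records the depth, so keys from different tree levels
    never collide when they share one hash table.
    """
    key = 1  # placeholder bit marking the root
    for bit in range(level - 1, -1, -1):
        octant = (((ix >> bit) & 1) << 2) | (((iy >> bit) & 1) << 1) | ((iz >> bit) & 1)
        key = (key << 3) | octant
    return key


def parent_key(key):
    """Dropping the last three bits moves one level up the tree."""
    return key >> 3


def build_cell_counts(points, level):
    """Count particles in every cell from the leaves up to the root.

    `points` are (x, y, z) tuples inside the unit cube [0, 1).
    Returns a dict that maps cell key to particle count.
    """
    cells = {}
    cells_per_side = 1 << level
    for x, y, z in points:
        key = morton_key(
            int(x * cells_per_side), int(y * cells_per_side), int(z * cells_per_side), level
        )
        while key >= 1:  # walk from the leaf cell up to the root (key 1)
            cells[key] = cells.get(key, 0) + 1
            key = parent_key(key)
    return cells


# Two hypothetical particles in opposite corners of the unit cube.
counts = build_cell_counts([(0.1, 0.1, 0.1), (0.9, 0.9, 0.9)], level=2)
print(counts[1])
```

Because a parent key is obtained by a bit shift, any cell can be located with a single hash lookup, which is one reason this layout suits distributed-memory machines.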

The project used resources provided by Oak Ridge Leadership Computing Facility at Oak Ridge National Laboratory and the National Energy Research Scientific Computing Center (both funded through the DOE Office of Science) and the Institutional Computing Program at LANL. The research supports the Lab’s Information, Science, and Technology science pillar. Technical contact: Michael Warren

Hsin-Chih Yeh honored with Postdoctoral Publication Prize

Hsin-Chih (Tim) Yeh of the Center for Integrated Nanotechnologies (MPA-CINT) received the Laboratory Postdoctoral Publication Prize in Experimental Sciences. The biennial prize is awarded for the best article in experimental sciences that has been published or accepted for publication. Former Physics (P) Division Leader Damon Giovanielli created the prize in 1999 and funds it.

Yeh received the award for his paper, “A Silver DNA Nanocluster Probe that Fluoresces Upon Hybridization,” published in 2010 in Nano Letters. James Werner (MPA-CINT) mentored Yeh. According to Werner, Yeh's paper “represents an innovative new discovery and successful application/demonstration of a new nucleic detection probe.” These probes, termed nanocluster beacons, have distinct advantages over molecular beacon probes for the detection of nucleic acid targets and thus have a large potential impact in biotechnology. Yeh is now an assistant professor in the Biomedical Engineering Department at the University of Texas, Austin.

Gabriel Montaño elected president of SACNAS

Gabriel Montaño (Center for Integrated Nanotechnologies, MPA-CINT) is president-elect of the Society for Advancement of Hispanics/Chicanos & Native Americans in Science (SACNAS). Montaño assumed the post January 1 and will serve for four years: the first as president-elect, two years as president, and the fourth as past president. In 2006, the society honored him with a Presidential Service Award for leadership of the SACNAS Student Presentations Program. “I always say I’m a Chicano from Gallup, New Mexico since I’m proud of both,” he said.

Montaño holds a PhD in molecular and cellular biology from Arizona State University. As a staff scientist in CINT’s soft, biological, and composite nanomaterials thrust area, Montaño explores ways to predict and control nano-bio interactions in natural and artificial membrane assemblies as a means to create new dynamic, energetic materials.

James Boncella chairs Gordon Conference

James Boncella

James Boncella (Materials Synthesis and Integrated Devices, MPA-MSID) chaired the Gordon Conference on Organometallic Chemistry at Salve Regina University in Newport, RI. This conference is one of the premier conferences on organometallic chemistry in the world. It is one of nine (out of 170) Gordon Research Conferences that are held annually. The conference brings together chemists from academia, industry, and governmental laboratories to present new, unpublished results at the forefront of the field. Because organometallic chemistry lies at the interface of organic and inorganic chemistry, the conference covered a wide range of topics, including homogeneous and heterogeneous catalysis, drug synthesis, and chemical transformations related to energy production. The chair of the conference is elected by participants and serves a two-year term, first as vice-chair and then as chair. This year’s meeting brought together 26 speakers from the U.S., Canada, and Europe, with the 175 participants coming from all continents.

A sister conference, the Gordon Research Seminar on Organometallic Chemistry, also met at Salve Regina University. This conference is organized and run by students or post-docs who are pursuing careers in the field. This year, the conference elected Los Alamos post-doc Neil Tomson (MPA-MSID) as vice-chair for 2014. He will be the chair of the 2015 Gordon Research Conference on Organometallic Chemistry. Technical contacts: James Boncella and Neil Tomson

Bioscience

Mechanism of drug transport across the cytoplasmic membrane

Drug delivery across the cytoplasmic membrane often involves an interaction of the drug with a membrane efflux system. One of the best-studied efflux pump systems is AcrB (Acridine resistance protein B), a 340-kilodalton cytoplasmic membrane resistance-nodulation-cell division efflux pump that is a part of the AcrAB-TolC tripartite efflux system. This integrated three-component molecular complex extrudes a large variety of cytotoxic substances such as antibiotics, organic solvents, dyes, and detergents from the cell directly into the medium, bypassing the periplasm and the outer membrane. In an article in The Journal of Structural and Functional Genomics, researchers from LANL’s Physics and Bioscience divisions and the Tokyo Institute of Technology have determined the 3-D structure of AcrB with bound Linezolid, the first FDA-approved oxazolidinone antibiotic used to treat serious infections caused by Gram-positive bacteria that are resistant to other antibiotics.

Figure 3. Schematic diagram of AcrB-Linezolid complex. The Linezolid molecules are in colored space-filling model, viewable through one of the vestibule channels. The symmetric AcrB protomers are in ice-blue, coral, and gold ribbons. The 670-675 loops are shown in crimson tubes as depicted. Other components of the AcrB trimer are also labeled.

The team determined the crystal structure of an AcrB-Linezolid complex at a resolution of 3.5 Å. The structure shows that one Linezolid binds to the A385/F386 loop of each protomer in the symmetric trimer of AcrB. This loop has previously been shown to interact with other antibiotics such as Ciprofloxacin, Nafcillin, and Ampicillin. Researchers found a conformational change at the bottom of the periplasmic cleft that may be important for binding of the periplasmic membrane fusion protein AcrA and for drug transport.

Reference: “Crystal Structure of AcrB Complexed with Linezolid at 3.5Å Resolution,” The Journal of Structural and Functional Genomics 14, 71 (2013); doi: 10.1007/s10969-013-9154-x. Researchers include Li-Wei Hung (Applied Modern Physics, P-21), Heung-Bok Kim, Goutam Gupta, Chang-Yub Kim, and Thomas C. Terwilliger (Biosecurity and Public Health, B-10); and Satoshi Murakami (Tokyo Institute of Technology).

The Laboratory Directed Research and Development (LDRD) Program supported the LANL research for a Feasibility Studies Program for Study grant. The Next Generation World-Leading Researchers (NEXT Program), National Institute of Biomedical Innovation (NIBIO), and the Japan Science and Technology Agency supported Murakami’s work. Hung determined the crystal structure of the AcrB-Linezolid complex at the Berkeley Center for Structural Biology at the Advanced Light Source (ALS). Technical contacts: Li-Wei Hung and Thomas Terwilliger

High Performance Computing

Design and deployment of the new Powerwall Theater

Imagine being able to see your critical data in three-dimensional, full-color images on a movie-theater-size screen! This is what the Powerwall Theater (PWT) makes possible. The PWT is a wall of screens used to display images of computational equations. The wall is also used for presentations and real-time collaboration in a stereo environment. The new Powerwall Theater was a multi-year project led by the Advanced Simulation and Computing (ASC) Production Visualization project (Laura Monroe, ASC Production Viz Project Lead; Dave Modl and Paul Weber, High Performance Systems Integration). Divisions across LANL worked together to create a beautiful and practical product that is used daily to enhance the Lab’s scientific work. As a world-class facility that differentiates the Laboratory, the PWT is often cited in proposals and reviews of LANL programs.

The projectors that formerly created the PWT were ten years old and in need of technical improvement. Therefore, the team took the opportunity to advance the technological capabilities of the theater during the upgrade. The former PWT had 24 screens and 24 projectors. The new one has 3 screens and 40 projectors. The new technology allows the 40 projectors to create one seamless image using inputs from the Viewmaster 2 Cluster, PCs and a document camera, and produces increased detail and more continuity in the images. The link shows a time lapse of the creation of the new PWT: http://int.lanl.gov/org/padste/adtsc/_assets/video/power-wall-lapse.html

For the first time, the PWT can be used for presentations from the Yellow Network. Previously, only data residing on the Red Network could be viewed. This is a great convenience because it allows users to access their open data on the Yellow Network. They can also access the Internet during a presentation.

Photo. New Powerwall Theater display shows a global ocean simulation.

When someone wants to visualize simulation data created in the secure environment, they extract geometry on the original simulation platform (on Cielo, for example), and then send the geometry across the network to Viewmaster 2 for rendering. The Viewmaster 2 cluster uses various software, such as EnSight, to create images from the data. This ability to visualize large-scale simulated data has become an integral part of research performed at LANL.

The PWT upgrade is part of the overall upgrade to the LANL Visualization Corridor, which comprises the visualization computers, the software and hardware infrastructure supporting use of the computers, and the display systems. The Visualization Corridor upgrade began with the new computer cluster, Viewmaster 2. This is much more powerful than the previous visualization cluster, and it permits more functionality. Researchers can view their work from the desktop, using an extender that enables the display of video from Viewmaster 2 on their desktop monitor. The 3-D glasses used for the PWT are electronic and produce much better 3-D viewing than the disposable glasses from movie theaters.

Figure 4. Late-time snapshot from a 1.7 billion cell 3-D RAGE simulation run on 32,000 Cielo processors depicts turbulence growth in an ICF capsule with a 5% imposed asymmetry on the laser drive. In this view the plastic inertial drive shell has been removed and only the central fuel region is visible. The white surface is the plastic/fuel interface. The total vorticity in the interior of the fuel is displayed in a volume-rendered representation that reveals the dynamical evolution of the vortex rings to a fully turbulent state for the fuel. This snapshot was rendered using the EnSight 10 software and the ViewMaster 2 visualization cluster. Image courtesy of Vincent A. Thomas and Robert J. Kares.

Many improvements enhance the new PWT. It has 16 times as many pixels as a movie theater, and the pixels provide detail and clarity that far surpass what is seen in movie theaters. The combination of high resolution and smooth imagery is needed for scientific visualization and is what makes this Powerwall so special. The PWT is a refracting screen created by sandwiching Steward Film Starglass 60 between two pieces of glass. The projectors are behind the glass sandwich. The PWT is a blended projected surface: there are areas where several projectors are projecting at the same time, and the transition between a pair of projected images is smoothly blended, leading to a much better image. Two projectors project an image on each space to provide redundancy. This is a vast improvement over the past PWT, which left a hole in the image if one of the projectors was not operating. Self-calibrating projectors use a projector cluster to calibrate color, and a camera system to align lines. This saves a great deal of time compared with manual calibration and gives a far superior calibration. The projectors use red, green and yellow LED bulbs that will last the life of the projectors. The PWT has much more flexibility in input handling by using the Christie Spyder video-input handler / pixel buffer / image processor. The system supports a Yellow-Network system, an X-LAN system, Viewmaster 2, an improved-quality document camera, and digital sound.
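The blending of overlapping projector images can be pictured with a simple cross-fade. The Python sketch below is a generic illustration and not the PWT's calibration software: it assumes a linear ramp across the overlap region, and the names `blend_weights` and `blended_intensity` and the pixel-column numbers are hypothetical. Real systems typically also correct for projector gamma and color.

```python
def blend_weights(x, overlap_start, overlap_end):
    """Return (left_weight, right_weight) at horizontal position x.

    Outside the overlap only one projector contributes. Inside it, the
    weights ramp linearly and always sum to 1, so brightness stays even.
    """
    if overlap_end <= overlap_start:
        raise ValueError("overlap_end must be greater than overlap_start")
    if x <= overlap_start:
        return 1.0, 0.0
    if x >= overlap_end:
        return 0.0, 1.0
    t = (x - overlap_start) / (overlap_end - overlap_start)
    return 1.0 - t, t


def blended_intensity(x, left_value, right_value, overlap_start, overlap_end):
    """Combine the two projectors' pixel values at position x."""
    w_left, w_right = blend_weights(x, overlap_start, overlap_end)
    return w_left * left_value + w_right * right_value


# Hypothetical overlap between pixel columns 100 and 200.
for column in (50, 100, 150, 200, 250):
    print(column, blend_weights(column, 100, 200))
```

When both projectors show the same target value, the blended result equals that value everywhere, which is why the seam is not visible.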

The ability to use visualizations and visualization facilities has had an impact on LANL’s scientific work. Examples include end-to-end calculations to investigate asteroid dynamics and impacts, simulations of turbulence growth in an inertial confinement fusion (ICF) capsule, and interactions with glove box ergonomics.

Laboratory organizations that participated in the Powerwall project include High Performance Computing (HPC), X-Computational Physics (X-CP), Network and Infrastructure Engineering (NIE), Security Operations (SO), Environment, Safety, and Health Deployed Services (DESH), Acquisition Services Management (ASM), Information Resource Management (IRM), and the Chief Financial Office (CFO). NNSA funded the work, which supports the Lab’s mission areas and the Information, Science, and Technology science pillar. Technical contact: Laura Monroe

LANSCE

Lujan Center featured in book on techniques to probe the structure of materials

Lujan Neutron Scattering Center scientist Katharine Page (LANSCE-LC), with co-authors from Oak Ridge National Laboratory and the University of Erlangen in Germany, wrote a chapter in Modern Diffraction Methods. The book describes the benefits of a variety of advanced diffraction techniques for understanding a diversity of materials.

In “Structure of Nanoparticles from Total Scattering,” chapter 3, Page and co-authors illustrate how defects in the arrangement of nanocrystalline particles can affect the behavior of materials. They review the fundamentals of total scattering experiments using X-rays or neutrons, introduce structure-modeling approaches for nanoparticle systems, and provide examples (a few from the Neutron Powder Diffractometer, NPDF, at the Lujan Center). The authors predict that the pair distribution function approach will contribute significantly to the structural description of nanomaterials and inform experimental and theoretical efforts to apply and understand their properties in the coming decade.

Figure 5. Cover of the book.

In recent years, the use of total scattering has grown as an important tool to study the local structure of materials. This is in contrast to the long-range average structure obtained by more traditional crystallographic methods based on Bragg peaks. Many times the defects that are revealed using total scattering hold the key to the material properties of interest. Local structure is particularly important when studying nanoparticles, which have a length scale intermediate between amorphous and extended crystalline materials, and exhibit a large number of atoms on the surface relative to the core.

Reference: Page, K. L., Proffen, T., and Neder, R. B. 2012 “Structure of Nanoparticles from Total Scattering.” In Modern Diffraction Methods, Eds. E. J. Mittemeijer and U. Welzel, pp. 61-87. Weinheim, Germany: Wiley-VCH Verlag GmbH & Co. KGaA.

The DOE Office of Basic Energy Sciences funds the Lujan Neutron Scattering Center at the Los Alamos Neutron Science Center. The work supports the Lab’s Energy Security mission area and the Nuclear and Particle Futures and Science of Signatures science pillars. Technical contact: Katharine Page

Materials Science and Technology

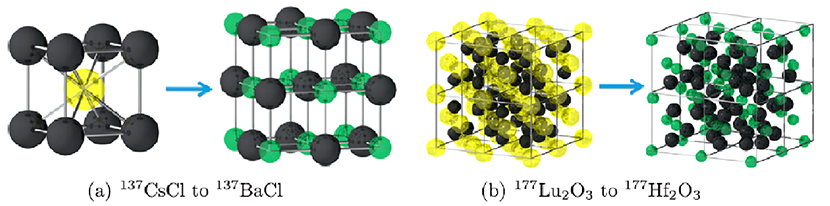

Reverse engineering nuclear waste design

In what New Scientist magazine described as an “ambitious” approach to managing nuclear waste, Laboratory researchers are investigating the potential to reverse-engineer waste forms that become more stable over time.

The process was explained in a New Scientist article describing a number of possible waste management solutions. The LANL research described is part of the project “Radioparagenesis: Robust Nuclear Waste Form Design and Novel Material Discovery,” led by Chris Stanek (Materials Science in Radiation and Dynamics Extremes, MST-8), which aims to answer a fundamental materials science question regarding the impact of daughter product formation on the stability of solids comprised of radioactive isotopes. By answering this question, a predictive capability could be established to design radiation tolerant and chemically robust nuclear waste forms. This could remove a significant obstacle limiting the expansion of nuclear energy. The project has predicted that the chemical transmutation that occurs during radioactive decay, which the researchers call “radioparagenesis,” may be a unique synthesis route to compounds that are often unconventional (e.g., rocksalt BaCl from the decay of 137Cs in CsCl). By understanding the chemical evolution a waste form may undergo, more robust waste forms can be designed. Furthermore, the work has also resulted in new computational tools that predict the performance of waste in a repository setting with greater reliability.

Figure 6. Two examples of DFT (density functional theory) predictions of radioparagenesis, where (a) describes the formation of rocksalt BaCl from the decay of 137Cs in CsCl, and (b) describes the formation of bixbyite Hf2O3 from the decay of 177Lu in Lu2O3. In both examples, the yellow atoms refer to the parent atom, green to the daughter, and black to stable lattice anions. The team recently performed the 177Lu2O3 experiment at LANL.

The challenge of validating the predictions made is that the “short-lived” fission products of interest from a waste disposal perspective have 30-year half-lives, which would require experiments of 200-year duration. Therefore, the research team developed an accelerated chemical aging approach that relies on synthesis of samples comprised of very short-lived isotopes made by the Lab’s Isotope Production Facility at LANSCE. The scientists performed an accelerated aging experiment involving 177Lu2O3, which has a 6-day half-life. Although 177Lu is not a fission product of interest for waste disposal, its decay does mimic that of important fission products 137Cs and 90Sr because its daughter product (Hf) is considerably different chemically. To minimize the samples’ radioactivity, the team used very small samples and relied on TEM (transmission electron microscopy) characterization. Initial results suggest that transmutation does indeed defy intuition and may result in novel phase formation.
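The time scales quoted above follow from the exponential decay law. The short Python sketch below works through the arithmetic under the assumption that "aging" is measured by the fraction of parent atoms that have transmuted; the function names `fraction_transmuted` and `equivalent_time` are hypothetical and chosen only for this illustration.

```python
def fraction_transmuted(elapsed, half_life):
    """Fraction of parent atoms that have decayed after `elapsed` time.

    Uses N(t)/N0 = 2 ** (-t / half_life); both arguments share one unit.
    """
    return 1.0 - 2.0 ** (-elapsed / half_life)


def equivalent_time(elapsed, half_life, fast_half_life):
    """Time a shorter-lived isotope needs to reach the same decayed fraction."""
    number_of_half_lives = elapsed / half_life
    return number_of_half_lives * fast_half_life


# Values from the text: 30-year half-life, 200-year experiment, 6-day half-life.
slow_fraction = fraction_transmuted(200.0, 30.0)   # time in years
fast_days = equivalent_time(200.0, 30.0, 6.0)      # result in days
fast_fraction = fraction_transmuted(fast_days, 6.0)

print(f"fraction transmuted after 200 years: {slow_fraction:.4f}")
print(f"equivalent time for a 6-day half-life: {fast_days:.1f} days")
```

Under these assumptions, 200 years is about 6.7 half-lives of a 30-year isotope, by which point roughly 99 percent of the parent atoms have transmuted. An isotope with a 6-day half-life reaches the same fraction in about 40 days.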

References: “Eternal Challenge: Are We Getting Any Closer to Curing Nuclear Energy’s Biggest Headache?” New Scientist 220, 2941, 42 (Nov. 2013). Amanda Mascarelli authored the article.

“Accelerated Chemical Aging of Crystalline Nuclear Waste Forms,” Current Opinion in Solid State and Materials Science 16, 126 (2012). Researchers include C. R. Stanek and B. P. Uberuaga (MST-8), B. L. Scott and R.K. Feller (Materials Synthesis and Integrated Devices, MPA-11), and N. A. Marks (Curtin Institute of Technology).

Laboratory Directed Research and Development (LDRD) funded the work, which supports the Lab’s Energy Security mission area and Materials for the Future science pillar. Technical contact: Chris Stanek

Physics

First measurements will advance turbulence models

In research featured on the cover of Journal of Fluid Mechanics, an interdisciplinary Los Alamos team took a series of first-time measurements, providing new insights for turbulence modelers. Variable-density turbulence models are widely used in computer simulations at the Laboratory for many applications.

Turbulent mixing has important consequences for supersonic engines, inertial confinement fusion reactions, and supernova explosions. Richtmyer-Meshkov (RM) instabilities are created when a shock wave interacts with fluids of different densities. These instabilities can cause different outcomes. In supersonic engines RM enhances combustion efficiency by blending the fuel and the oxidizer. In inertial confinement fusion (ICF) reactions, the mixing induced by the RM instability (created by a converging shock wave on the fuel-shell interface) can contaminate fuel and impair fusion yield. Scientists have attributed the patterns observed in supernova explosions and ejecta from shock-induced metal melt to RM instability.

The team directly measured terms in turbulence model equations, providing insights into the global nature of the mixing (e.g., faster mixing near the edges of the turbulent fluid layer when compared with the core) and identifying the dominant mechanisms governing the flow evolution. These terms are needed for accurate models.

Figure 7. The image on the journal cover is an instantaneous, quantitative map of concentration of the heavy gas sulfur hexafluoride (SF6, in white) as it mixes into the lighter gas (air, in black). The flow structure evolves in time and rapidly begins to mix as it moves from left to right on the image.

The researchers took high-resolution mean and fluctuating velocity and density field measurements in an RM flow, which was shocked and reshocked, to understand production and dissipation in a two-fluid, developing turbulent flow field. An unstable array of initially symmetric vortices induced rapid material mixing and created smaller-scale vortices. After reshock, the flow transitioned to a turbulent state. The team used planar measurements to probe the developing flow field. They took the first experimental measurements of the density self-correlation and terms in its evolution equation.
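As a rough illustration of the quantity being measured, the sketch below computes a density self-correlation from a set of density samples. It assumes the definition commonly used in variable-density turbulence modeling, b = -<rho' v'>, where v = 1/rho is the specific volume and primes denote fluctuations about the mean; the source does not spell out the definition, so treat this as an assumption. The function names and the sample values are hypothetical.

```python
def mean(values):
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)


def density_self_correlation(rho):
    """Return b = -<rho' v'> with v = 1/rho.

    Algebraically this equals <rho> * <1/rho> - 1. It is zero for a
    uniform (fully mixed) field and grows as the fluids segregate.
    """
    specific_volume = [1.0 / r for r in rho]
    rho_bar = mean(rho)
    v_bar = mean(specific_volume)
    return -mean((r - rho_bar) * (v - v_bar) for r, v in zip(rho, specific_volume))


# Hypothetical samples: equal volumes of a light and a heavy gas, unmixed,
# with an assumed density ratio of 5 in arbitrary units.
unmixed = [1.0, 1.0, 5.0, 5.0]
fully_mixed = [3.0, 3.0, 3.0, 3.0]

print(density_self_correlation(unmixed))
print(density_self_correlation(fully_mixed))
```

With planar density data, the same average could be taken over each column of pixels to show how the degree of mixing varies across the layer.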

Diagnostic advances in the shock tube over the last decade have made possible the simultaneous measurement of both density and velocity on a plane. This capability permits experimental estimation of the net result of complex physics, such as a state of the flow at a given time, as well as the individual terms in model equations that are used to predict the evolution of turbulence and mixing. These results provide insights into the nature and mechanisms of mixing in RM turbulence at low Mach numbers, and yield the first measurements of key quantities in turbulence models developed to tackle these types of flows. The team plans to simultaneously measure density-velocity at multiple times to probe the temporal evolution of the quantities and physics of turbulent mixing.

Reference: “Evolution of the Density Self-correlation in Developing Richtmyer-Meshkov Turbulence,” Journal of Fluid Mechanics 735, 288 (2013); doi: 10.1017/jfm.2013.430. Authors include Chris Tomkins (Applied Modern Physics, P-21), Balakumar Balasubramaniam, Greg Orlicz, and Kathy Prestridge (Neutron Science & Technology, P-23); and Raymond Ristorcelli (Methods and Algorithms, XCP-4).

The NNSA Science Campaign 4: Secondary Assessment Technologies (Kim Scott, LANL Program Manager) funded the work. The research supports the Lab’s Nuclear Deterrence and Energy Security mission areas and the Nuclear and Particle Futures science pillar. Technical contact: Chris Tomkins

Theoretical

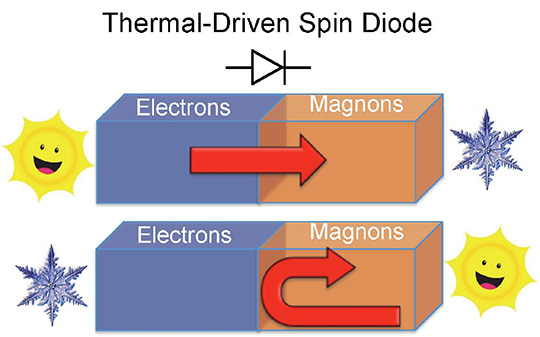

Using waste heat to drive a spin diode and transistor

Energy waste is a severe bottleneck in the supply of sustainable energy to any modern economy. Besides developing new energy sources, the global energy crisis can be alleviated by re-utilizing the wasted energy. Because about 90 percent of the world’s energy utilization occurs in the form of heat, effective heat control and conversion becomes critical. Jie Ren (Physics of Condensed Matter and Complex Systems, T-4) proposed the first mechanism for building a spin diode (conducts spin current in the forward direction, but insulates in the backward one) and transistor that are driven by waste heat. The journal Physical Review B published the research as a Rapid Communication.

This research provides a novel means of thermal energy utilization. It might enable construction of a quantum spin computer driven by waste heat, rather than electric current. Waste heat is widespread. Most information transport and energy conversion produce waste heat due to the second law of thermodynamics. For example, charge transfer produces waste heat by Joule heating in the chips of computers.

Ren predicted new phenomena of the spin Seebeck effect (SSE), in which a temperature bias produces a spin current and an associated spin voltage across a metal-insulating magnet interface. The spin Seebeck effect has been observed in various magnetic interfaces. SSE presents a new method of facilitating the functional use of heat and opens new possibilities for spintronics (coupled electron spin and charge transport in condensed-matter structures and devices) and spin caloritronics (interaction of spins with heat currents). The newly uncovered phenomena are the rectification and negative differential SSEs.

Figure 8. Sketch of a spin Seebeck diode. (Top panel): When the left end of the diode is at a higher temperature as compared with its right counterpart, spin current is allowed to flow almost freely. (Bottom panel): In contrast, when the right end is made hotter than the left, the conduction of spin current becomes strongly diminished.

He showed that reversing the thermal bias gives asymmetric spin currents, and increasing thermal bias gives an anomalously decreasing spin current. The conjugate-converted thermal-spin transport is assisted by the exchange interaction at the interface, between conduction electrons in the metal lead and localized spins in the insulating magnet lead. He also uncovered the rectification of the spin Peltier effect [the phenomenon in which reversing the spin voltage gives asymmetric energy (heat) flow]. These intriguing properties are essential for building a spin Seebeck diode and transistor that are driven by waste heat. Ren demonstrated that a non-smooth, strongly fluctuating electron density of states is crucial for the nontrivial rectification and negative differential spin Seebeck effect. He exemplified the principle in several typical cases of low-dimensional nano-systems.

Ren’s theory readily yields analytic interpretations of and physical insights into the microscopic mechanism of the spin Seebeck effect across magnetic interfaces, which can guide future optimization of the predicted thermally driven spin diode and transistor effects. It paves the way to using waste heat to drive spin/magnon diodes and transistors and to designing heat-driven spin logic gates and memory. His findings are also applicable to metal-magnetic metal/semiconductor interfaces and ferromagnetic metal-magnetic insulator interfaces. New unexpected phenomena related to the spin Seebeck effect await discovery. Finding them and uncovering the microscopic mechanism will offer new opportunities for spintronics, spin caloritronics, and magnonics (collective spin excitations in magnetically ordered materials) to achieve smart control of energy and information in functional devices. The predicted phenomena advance the microscopic understanding of the spin Seebeck effect and inform the design of efficient spin/magnon diodes and transistors driven by waste heat for practical applications. Ren suggests that a quantum computer without electric power might be possible.

Reference: “Predicted Rectification and Negative Differential Spin Seebeck Effect at Magnetic Interfaces,” Physical Review B 88, 220406 (R) (2013); doi: 10.1103/PhysRevB.88.220406.

Laboratory Directed Research and Development (LDRD) funded the work, which supports the Lab’s Energy Security mission area and the Materials for the Future science pillar. Technical contact: Jie Ren