One byte at a time

Codes, models, and simulations inform computational physicists’ work.

- Jill Gibson, Communications specialist

Jimmy Fung, the Integrated Physics Codes director at Los Alamos National Laboratory, explains computational physics over a cup of coffee. “Consider everything that is happening to a physical system,” he says. “In fact, consider this physical system.” He gestures to the mug of steaming dark roast in his left hand. “Right now, acting on this cup, there are forces, effects and interactions, and the responses of materials. I can use computational physics to predict what will happen when certain variables change, such as the heat, the type of material the cup is made up of, the different chemical compounds within the coffee in the cup.”

Computational physics is a branch of physics that uses computation (calculation of mathematical formulas) to solve the complex mathematical equations that express the fundamental principles and laws governing the behavior of physical systems or phenomena.

“All we’re doing is using math to solve science problems,” says Computational Physics division leader Scott Doebling, who also leads the Laboratory’s Advanced Simulation and Computing (ASC) program.

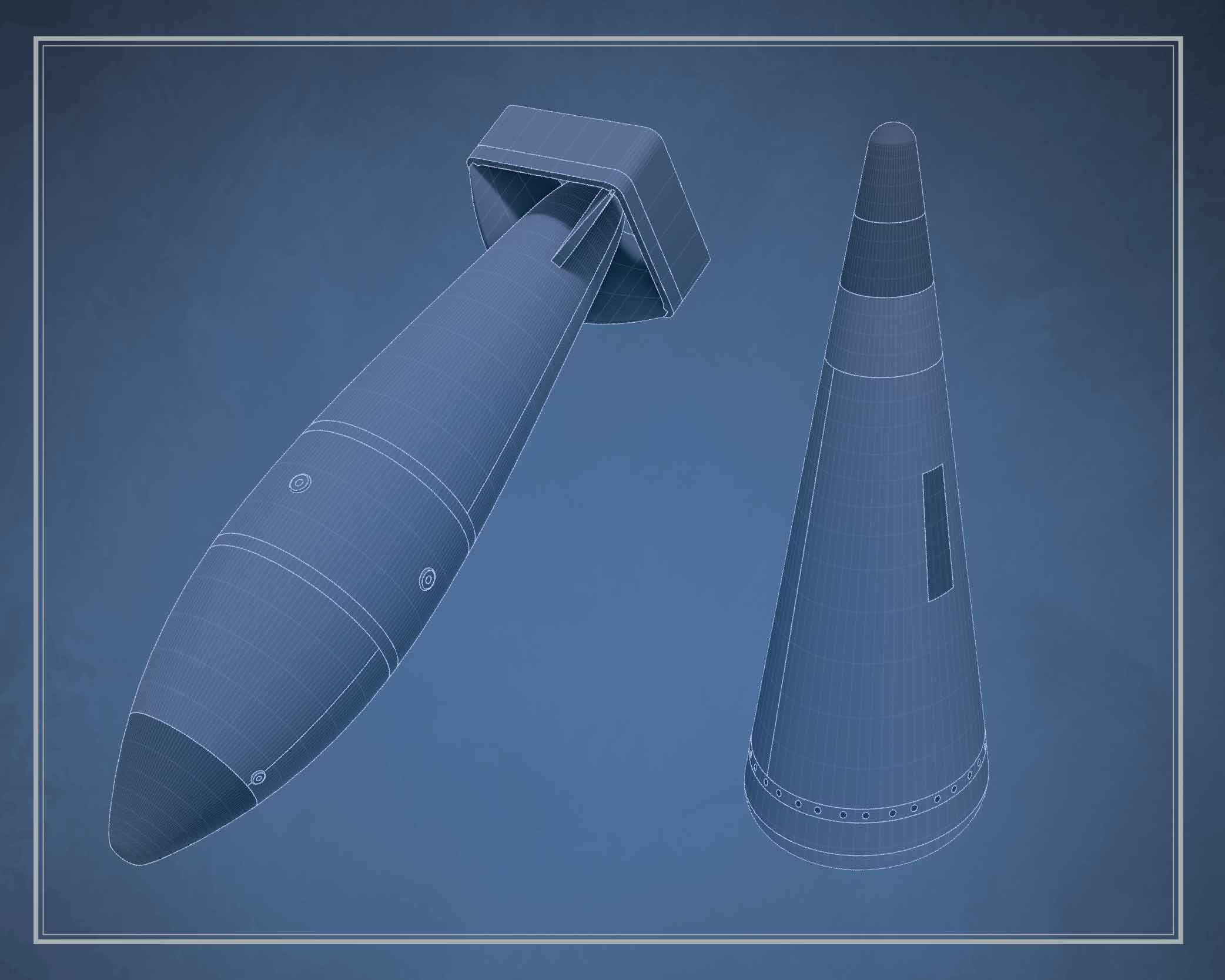

Much of the computational physics work at Los Alamos focuses on the performance and aging of nuclear weapons. Los Alamos is responsible for the design and maintenance of four of the seven types of nuclear weapons in the current stockpile, all of which are decades old. Computational physics allows scientists to predict or analyze how weapons components age and how a weapon could perform if ever detonated—without actually detonating a weapon.

“Los Alamos National Laboratory depends on computational physics to ensure that America’s nuclear deterrent is safe, secure, and effective,” Doebling says. “In many ways, computational physics is the heartbeat of the Lab.”

Models and codes

A computational physicist studying Fung’s coffee would develop relevant physics theories about the coffee and the cup and then build a model to forecast the outcome of certain changes to the cup of coffee. A model is a mathematical representation of a physical system or phenomenon, often described by equations or rules derived from the underlying physics. A model provides the framework or theoretical foundation for understanding a system’s behavior.
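As a hedged illustration only, one of the simplest possible models for Fung’s coffee is Newton’s law of cooling. The article does not say which models a physicist would actually use. This model assumes the coffee temperature T falls at a rate proportional to its difference from the room temperature T_room. It uses a single rate constant k, an assumed parameter that lumps together the cup material, the coffee, and the surroundings. The equation and its exact solution, for a starting temperature T_0, are:

```latex
\frac{dT}{dt} = -k\,\bigl(T - T_{\text{room}}\bigr),
\qquad
T(t) = T_{\text{room}} + \bigl(T_0 - T_{\text{room}}\bigr)\,e^{-kt}
```

A real model of the cup would add terms for evaporation, conduction through the mug, and the chemistry of the coffee. Each added effect is another piece of physics the model must represent.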

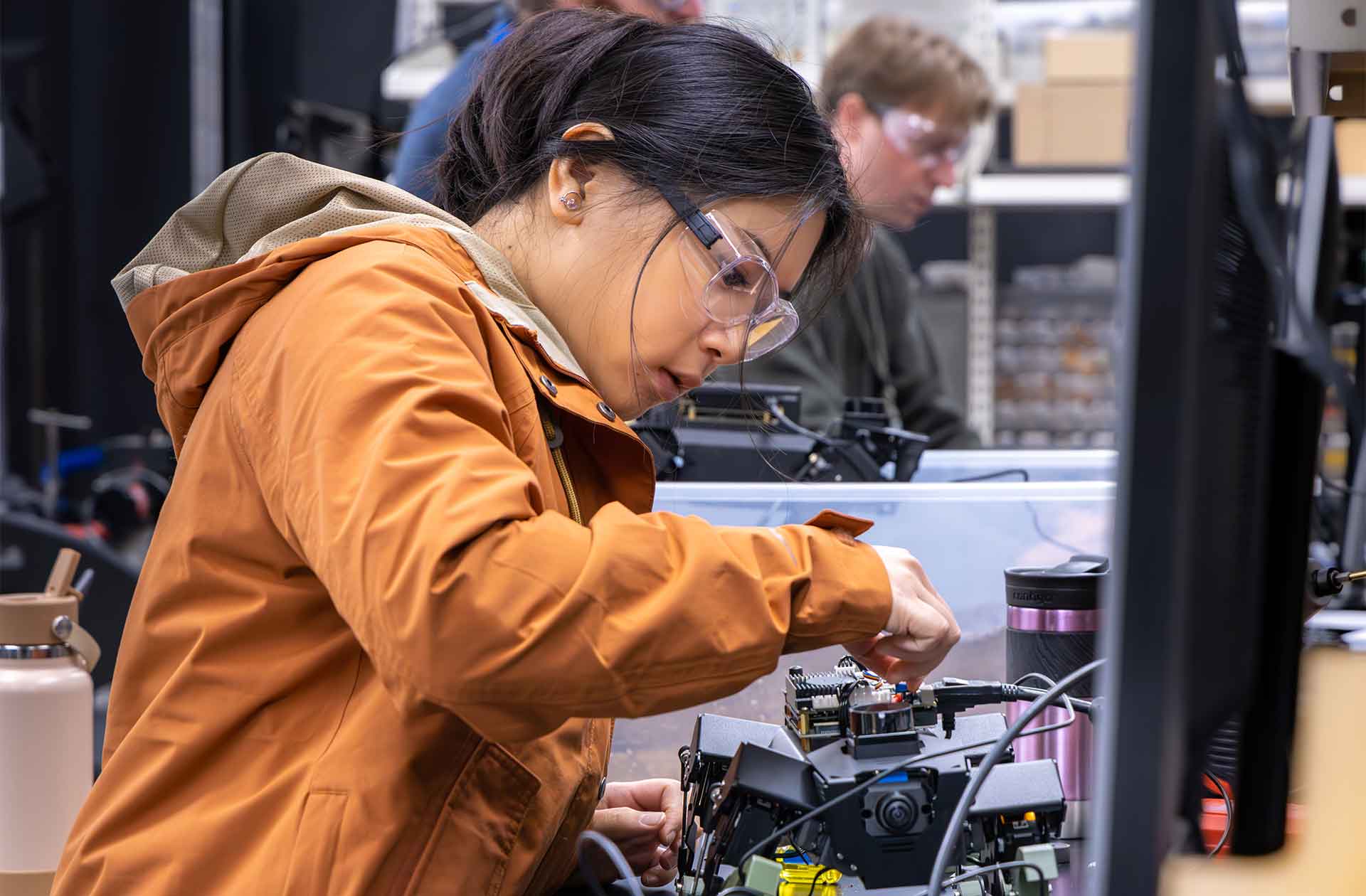

Next, the physicist would create computer codes that implement the model. These are programs written in languages such as C++ and Fortran. A computer code is a set of directions that a computer can follow to perform specific computational tasks. Fung says that to develop codes, computational physicists need expertise in mathematics, physics, computer science, and software engineering. Scientists create new codes and adapt existing ones to solve problems, make predictions, or simulate physical phenomena. Codes convert the theoretical framework of the model into numerical steps.

“The code itself is the set of instructions that you give to the computing hardware in order to generate that representation of reality,” Charlie Nakhleh, associate Lab director for Weapons Physics, says. “Our codes are remarkably complicated things that encapsulate, depend upon, and tie together multiple different models.”

Codes allow scientists to automate and scale up complex calculations that involve multiple variables. They interact with hardware and software to perform computations—making calculations, solving equations, or processing data.

“Codes are built by taking mathematical equations and discretizing them, transforming continuous equations into discrete forms: separate parts where variables have set values at specific points or intervals,” Fung says. This key technique makes it possible for computers to solve equations that are defined over continuous space and time, even though a computer can carry out only a finite number of arithmetic operations.
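Here is a minimal sketch of discretization, under assumptions that do not come from the article. The coffee is modeled with Newton’s law of cooling, dT/dt = -k(T - T_room). The hypothetical function `cool_coffee` replaces continuous time with points spaced `dt` apart and approximates the derivative with a forward difference, which is the forward Euler method. The parameter values in the usage example are invented for illustration.

```python
def cool_coffee(t0, t_room, k, dt, t_end):
    """Forward-Euler discretization of dT/dt = -k * (T - T_room).

    Continuous time is replaced by discrete points t_n = n * dt, and the
    derivative by the finite difference (T_{n+1} - T_n) / dt.
    Returns the temperature at every time point, starting with t0.
    """
    n_steps = round(t_end / dt)  # number of discrete intervals
    temps = [t0]
    for _ in range(n_steps):
        t_now = temps[-1]
        # Solve the difference equation for the next value:
        # T_{n+1} = T_n - dt * k * (T_n - T_room)
        temps.append(t_now - dt * k * (t_now - t_room))
    return temps
```

For example, `cool_coffee(90.0, 20.0, 0.05, 1.0, 30.0)` follows coffee that starts at 90 °C in a 20 °C room through 30 one-minute steps. It ends at about 35 °C. Smaller values of `dt` cost more computation but track the continuous equation more closely.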

Nakhleh says what goes on inside a human brain is not so different from what happens inside a computer. “The only way the human brain has ever been able to understand the world in which it lives and operates is to break it down into pieces, right? Complex reality is too much for anybody. So, what you do is you break it down into pieces, you understand the pieces, you analyze the problem, and then you tie it back together. And that's what a computer code does in terms of its simulation. It breaks this representation of reality down into a bunch of pieces, factors out these pieces into different parts of the computer code, and then ties them all back together to generate a simulation of what its view of reality is.”

Codes change as they are adapted and rewritten, and some are discarded in favor of newer codes. Fung estimates that over the years the Lab has created more than 100 codes. “New software practices and new computer languages emerge that lead scientists to write new codes or rewrite old ones to take advantage of those new practices,” Fung says. “Codes merge or split off, sometimes due to technological and scientific advances, and sometimes due to mission, organizational, and cultural changes,” he adds.

Some of the most-used codes can be grouped by the method or approach they use. For example, Eulerian codes are based on observing a system at fixed points; Lagrangian codes track individual particles or elements as they move; Arbitrary Lagrangian-Eulerian (ALE) codes combine aspects of both Eulerian and Lagrangian methods; and Monte Carlo codes use random sampling to solve problems. The first Monte Carlo code was developed at Los Alamos in the 1940s to predict how radiation particles move through and interact with other materials. A variation of that code remains in use today.
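The random-sampling idea behind Monte Carlo codes can be shown with a toy problem: estimating what fraction of particles pass through a slab of material. This is a minimal sketch under stated assumptions, not a Los Alamos code. It assumes a one-dimensional slab made of a single material with a total interaction rate per unit length `sigma_total`. It also assumes each collision scatters the particle in a random direction with probability `scatter_prob` and absorbs it otherwise. The function name and its parameters are hypothetical.

```python
import math
import random


def slab_transmission(thickness, sigma_total, scatter_prob, n_particles, seed=0):
    """Monte Carlo estimate of the fraction of particles crossing a 1-D slab.

    Each particle enters at x = 0 moving straight in (direction cosine mu = 1).
    Distances between collisions are sampled from an exponential distribution
    with mean 1 / sigma_total. At a collision the particle scatters into a
    new random direction with probability scatter_prob; otherwise it is absorbed.
    """
    rng = random.Random(seed)  # seeded so results are repeatable
    transmitted = 0
    for _ in range(n_particles):
        x, mu = 0.0, 1.0
        while True:
            # Sample the flight distance: -ln(U) / sigma with U in (0, 1].
            distance = -math.log(1.0 - rng.random()) / sigma_total
            x += mu * distance
            if x >= thickness:  # escaped through the back face
                transmitted += 1
                break
            if x < 0.0:  # scattered back out through the front face
                break
            if rng.random() >= scatter_prob:  # absorbed at this collision
                break
            mu = 2.0 * rng.random() - 1.0  # isotropic new direction cosine
    return transmitted / n_particles
```

With `scatter_prob=0.0` (a pure absorber), the exact answer is e^(-sigma_total × thickness). So `slab_transmission(1.0, 1.0, 0.0, 100_000)` should land near 0.368. The statistical error shrinks roughly as one over the square root of the number of sampled particles, which is why Monte Carlo codes benefit from large computers.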

Simulations

Nuclear weapons are more complicated (and much more energetic) than Fung’s cup of coffee, but both can be studied using simulations. Simulations are the results of applying a model, through a code, to produce digital representations that replicate, analyze, or predict the behavior of systems in which multiple physical phenomena interact simultaneously. A simulation’s output can be a visualization, animation, graph, dataset, or any other representation that offers insight into a system. Although these results are often purely numerical, many can be translated into graphics and shared on large 2D and 3D displays. Simulations can take days, weeks, or even months to run.

Running a simulation of this scale requires a supercomputer, a computer designed to process large datasets at extreme speeds. At Los Alamos, teams of humans and teams of computers work together to solve problems. “It’s the computational physicist’s job to understand how physics and computation come together and then deliver these simulation capabilities,” Fung says.

Los Alamos scientists can explore 3D visualizations of simulations in two facilities: the Powerwall Theater and the La Cueva Grande SuperCAVE (Cave Automatic Virtual Environment). The Powerwall is a movie-theater-size screen in a theater-style room. It is connected to the Lab’s high-performance computers and displays stereo 3D images of color-coded simulations. Using the Powerwall, scientists can view animated processes, zoom in and out, and rotate graphics.

“People want to get together and discuss what they are working on,” says Dave Modl, a visualization engineer who supports the facility. He notes that training, meetings, and VIP visits often make use of the Powerwall.

Bob Greene, a computational physicist, works with his colleagues to prepare the visualizations. “The Powerwall brings scientists together in one room and literally allows them to get the big picture using complex 3D visualizations that illustrate the phenomena they are studying,” he says.

While the Powerwall is designed to serve large groups of people, the CAVE was created for only a few users at a time. Scientists can step into this 3D display and stand inside their simulations. “One at a time, they can interact with the display wearing tracking glasses and see the perspective as they move in space,” Modl says.

Both Modl and Greene say they stay busy providing support and expertise for these facilities. “The Powerwall and the CAVE offer computational physicists an important way to view their simulations and explore their data in high resolution,” Greene notes.

The importance of data

Data is the information that scientists feed into the computer. When it comes to nuclear weapons, data comes from two sources: the more than 1,000 full-scale nuclear tests America conducted before the 1992 testing moratorium and the experiments scientists now conduct across the nuclear security enterprise (many of them at Los Alamos). Data allows scientists to validate models and codes to ensure the resulting simulations are correct.

“If you can generate a simulation that matches the historical test database, then you’re going to have a very high degree of confidence that you’ve got an adequate representation of reality,” Nakhleh says.

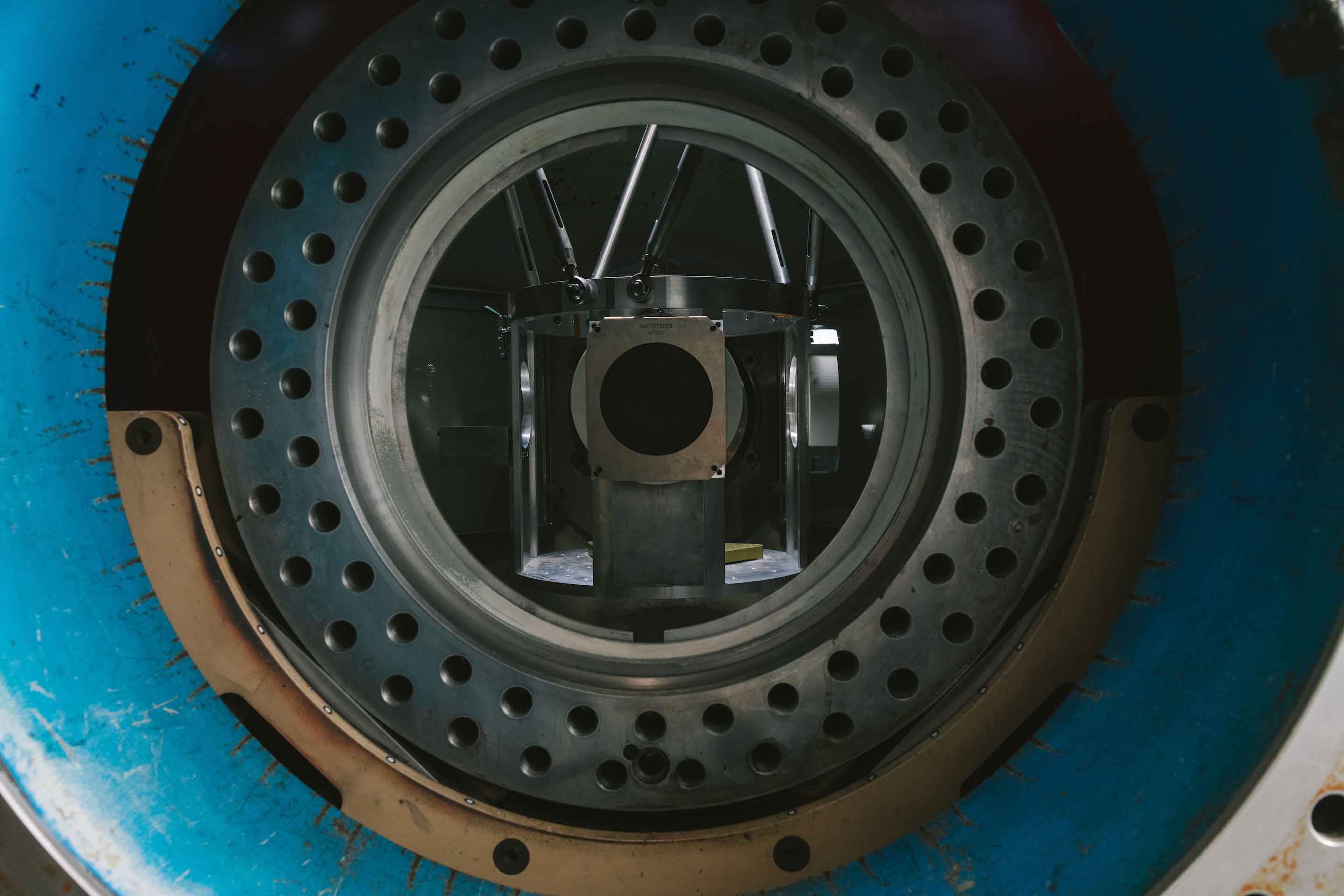

Validation also comes from current experiments, conducted at facilities including Los Alamos’ Dual-Axis Radiographic Hydrodynamic Test (DARHT) facility, Lawrence Livermore National Laboratory’s National Ignition Facility (NIF), and Sandia National Laboratories' Z Pulsed Power Facility.

Bob Webster, Los Alamos deputy director for Weapons, says such data is essential for validating the Lab’s computer models and codes. “We use experiments at NIF or Z or DARHT to continue to validate the models and codes that we have,” he says. “We can take those models and codes that we validated and put them together in a deductive sense in these giant high-performance computing environments and evaluate the logical consequence of the theories that we've proved out in these other regimes.”

Doebling says the ongoing experiments give researchers more confidence in their ability to create computer simulations that predict weapons behavior across many areas, including thermodynamics, fluid dynamics, mechanical properties, and the way materials age. Those simulations, in turn, feed back into experiments. “We use simulation to design experiments. We use those experiments to give us data to determine whether we are getting the right answers,” Doebling says. “We have lots of experiments to help tell us where our models and codes are right and where they are not.”

Verification and validation are essential, according to Doebling. “You have to ask yourself, ‘Is my answer right?’ That’s always been a challenge when doing math, but doing large-scale computing just kicks that up because there are so many ways you can get things wrong. We are looking at things like aging of materials where the phenomena are complicated. We need more data from more experiments to ensure we are getting things right.”
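A convergence study is one standard way to ask “Is my answer right?” of a code. It is a verification technique: run the code on a problem whose exact answer is known, shrink the step size, and confirm that the error falls at the expected rate. This sketch assumes a toy problem, Newton’s law of cooling, dT/dt = -k(T - T_room), solved with the forward Euler method. For that method, halving the time step should roughly halve the error. All names and parameter values are hypothetical.

```python
import math


def euler_final_temp(t0, t_room, k, dt, t_end):
    """Forward-Euler solution of dT/dt = -k * (T - T_room), evaluated at t_end."""
    temp = t0
    for _ in range(round(t_end / dt)):
        temp -= dt * k * (temp - t_room)
    return temp


def exact_final_temp(t0, t_room, k, t_end):
    """Analytic solution T(t) = T_room + (T0 - T_room) * exp(-k * t)."""
    return t_room + (t0 - t_room) * math.exp(-k * t_end)


def convergence_study(t0=90.0, t_room=20.0, k=0.05, t_end=30.0):
    """Return (dt, error) pairs as the time step is halved four times."""
    exact = exact_final_temp(t0, t_room, k, t_end)
    results = []
    dt = 2.0
    for _ in range(5):
        error = abs(euler_final_temp(t0, t_room, k, dt, t_end) - exact)
        results.append((dt, error))
        dt /= 2.0
    return results


for dt, err in convergence_study():
    print(f"dt = {dt:6.4f} min, error = {err:.5f} deg C")
```

The errors should fall by about a factor of two per halving. If they do not, that points to a bug in the code or a mistake in the discretization. Comparing results against experimental data, which is how the article uses validation, is a separate check. Verification asks whether the equations are solved correctly; validation asks whether they are the right equations.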

The evolution of computational physics

Computational physics was born at Los Alamos during the Manhattan Project. “In 1943, physicists needed to do a job,” Doebling says. “They needed to invent the technology to win the war.” That meant developing new ways to perform calculations used to understand and predict the complex physics of nuclear reactions.

During World War II, these calculations were initially performed by people, often the wives of male physicists and members of the Women’s Army Corps, who were called computers. Scientists then augmented the abilities of human computers with IBM accounting machines. “These were some of the largest and most sophisticated simulations ever conducted at that time,” explains Lab historian Nic Lewis. “Los Alamos pushed the limits of what was computationally possible in the mid-1940s.”

In the decades that followed, as the Cold War escalated and nuclear weapons became more complicated, more complex calculations were needed. “Lab scientists had to invent many of the technologies and methods needed,” Lewis says. “As a consequence, Los Alamos became a key driver in the evolution of electronic computers and the advancement of mathematical models that would become ubiquitous over subsequent decades.”

In 1992, the United States declared a moratorium on full-scale nuclear testing. Because researchers were no longer detonating nuclear weapons to see if they worked, scientists developed computational methods to predict weapons behavior using data from previous full-scale tests. Over the years, researchers added nonnuclear and subcritical experiments that did not generate self-sustaining nuclear reactions.

“To guarantee the reliability of the U.S. nuclear deterrent, vastly improved computational capabilities were clearly needed,” Lewis says. “Much as it did during World War II, Los Alamos spearheaded rapid advancements in computer hardware and simulation software to meet the needs of its national security mission.”

These advances provided (and continue to provide) scientists with the ability to modify weapons to meet military requirements, explore possible new designs, and assess the nuclear stockpile. “Taking care of the weapons—the deterrent of the present and the future—drives us,” Doebling says.

The future of computational physics

The floor of the Strategic Computing Complex at Los Alamos (where the supercomputers sit) is 43,500 square feet, about the size of a football field. Lab leaders say they anticipate that a larger facility will be needed someday. “We will always need newer, better computers to run bigger problems faster,” says High Performance Computing Director Jim Lujan.

Doebling notes that each new supercomputer requires more power and cooling than the last. “As we look toward the future, we have to have the power and the facilities to handle the computers,” Doebling says. “We have to plan 10 to 15 years in the future for the next computing systems.”

That planning requires responding to ongoing changes in the computing industry. “Computers are changing, and Los Alamos is having to adapt to industry changes in computing,” says Fung, noting that computer languages, algorithms, computer components, and approaches to physics are always changing, requiring continuous adaptations at the Lab.

“We are designing better algorithms, which represents the way we implement the math in the computer programs,” Doebling says. “We are also tailoring software to hardware and hardware to software to make them work faster as a system.” This approach, called codesign, makes the calculations faster, more reliable, and more repeatable, he says.

And then, of course, there is artificial intelligence (AI), but Doebling is optimistic about the impacts of this new technology. “AI is just another name for a set of mathematical techniques used to analyze data,” he says. “That’s what we do in computational physics: we use math to analyze data and to predict the way things will happen, and much of AI is just a different take on things we already know how to do. But other capabilities of AI lead us to think about things we never thought would be possible, things that could revolutionize computational physics and even our overall mission.”

Doebling predicts that AI will accelerate workflows by automating processes. He also expects it to help researchers develop improved models and verify and validate the predictions those models produce. “We need to harness the power of AI while maintaining our expertise on the physics,” he says.

The mission is the boss

“The mission is the boss” is a statement Doebling often makes. He says it means that every new development in high-performance computing and computational physics at Los Alamos is driven by the Lab’s national security mission. “We want to enable a future for the nuclear security mission that’s not only vibrant and secure but also agile and efficient in the face of ever-evolving challenges,” he says.

Fung seconds that goal. “The changing geopolitical situation may have implications for military requests, which may impact weapons design and simulation,” he says. “Weapons designers and computational physicists must work together very closely, particularly as mission applications and design practices evolve.”

That’s why both Fung and Doebling stress the importance of ensuring that the Lab maintains cutting-edge technology and hires technical experts who are willing to push the boundaries to get the answers they need.

“Computational physics plays a key role in ensuring our nuclear deterrent is safe, secure, and effective,” Fung says. “That’s incredible and adds to the satisfaction of working here.” ★