HPC Systems runs the production scientific computing resources for Los Alamos National Laboratory. The group operates and maintains some of the fastest supercomputers in the world for the betterment of our nation and the world, and is now building the infrastructure for the next generation of artificial intelligence, machine learning, and large language models.

HPC Systems

Computing News

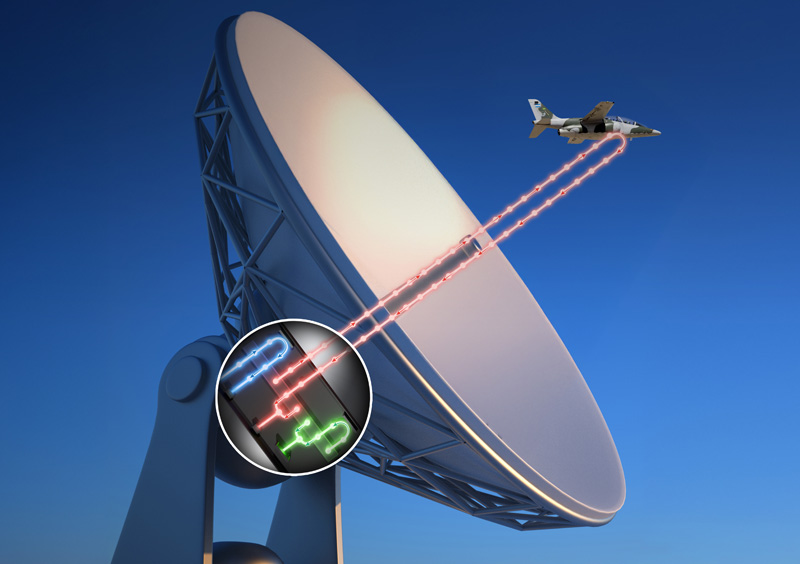

Quantum remote sensing approach overcomes quantum memory problem

Path identity the key to scheme that can unlock national security and communications applications

University of Michigan, Lab to jointly develop Michigan-based AI research center

This U-M press release highlights how a partnership expands research collaboration

AI algorithms deployed on-chip reduce power consumption of deep learning applications

Brain-inspired algorithm on neuromorphic hardware reduces cost of learning

Teams

Cybersecurity

The Cybersecurity Team uses policies and best practices to implement state-of-the-art cybersecurity tools on our High Performance Computing systems. The team's challenge is to minimize the impact on our users' scientific applications while still providing effective monitoring and security.

Monitoring

The Monitoring Team is responsible for monitoring everything within the HPC Data Centers, including Facilities, Clusters, File Systems, Networking, and Support Servers. Monitoring data, sensor information, and system logs are collected and transported throughout our extensive monitoring infrastructure. System data is analyzed and displayed, with specific alerts created for each team.

Platforms

The Platforms Team operates and maintains some of the largest supercomputers in the world. The team consists of highly skilled personnel who specialize in both the hardware and software aspects of High Performance Computing.

Support Services

Our Support Services Team deploys and maintains our existing and future on-premises, cloud-like systems. The team provides resources for both our internal HPC teams and external users by building and maintaining Red Hat Virtualization (RHV) infrastructure, user-facing OpenShift clusters, services such as GitLab, and our AI/ML/LLM NVIDIA DGX Pods.

Our Science/Capabilities

Primary Expertise

- Integration of Cybersecurity tools

- Vulnerability management

- STIG compliance

- Splunk administration

- Splunk dashboard and alerting

- RabbitMQ

- Kafka

- High Performance Computing system administration

- Cray system management

- TOSS administration

- RHEL administration

- OpenShift and Kubernetes container orchestration

- DGX Pods

Current and Emerging Strategic Thrusts

- Non-intrusive cybersecurity monitoring of HPC networks

- Holistic Data Center monitoring

- Integration of large HPC systems

- AI/ML/LLM infrastructure using GPU/DGX systems